- In this post, we will take a look at inference-based methods and neural networks.

Problems with Count-Based (Statistical) Methods

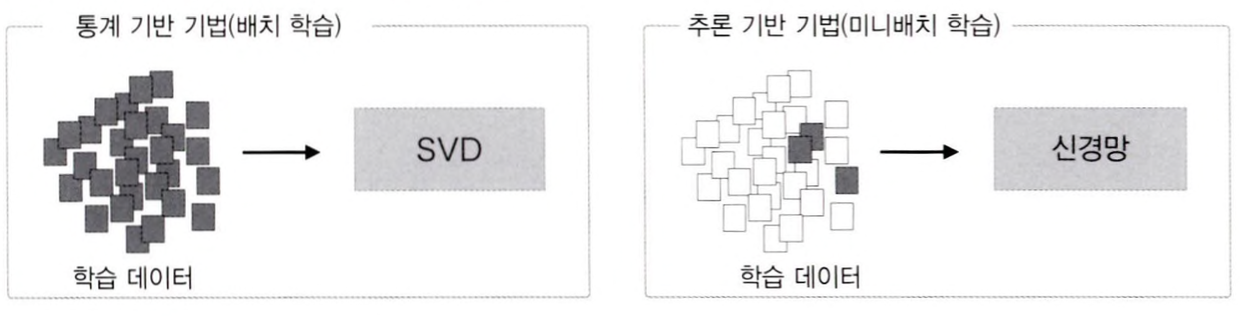

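Methods for representing words as vectors can be broadly divided into two families: 'count-based methods' and 'inference-based methods'.

- The two approaches obtain word meaning in different ways, but both are grounded in the distributional hypothesis.

- Count-based methods represent a word using the frequencies of the words around it.

- Specifically, they build a co-occurrence matrix of words and apply Singular Value Decomposition (SVD) to that matrix to obtain dense vectors.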

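- However, this approach runs into problems when dealing with a large corpus.

- Count-based methods use statistics over the entire corpus (the co-occurrence matrix, PPMI) and obtain distributed word representations in a single round of processing (SVD, etc.). With a vocabulary of n words the co-occurrence matrix is n × n, and computing its SVD costs O(n³), which becomes impractical once the vocabulary reaches hundreds of thousands or millions of words.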

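- Inference-based methods, that is, methods that use a neural network, are typically trained with mini-batches.

- In mini-batch training, the network learns from a small batch of training samples at a time, updates its weights after each batch, and repeats this over the data.

- As a result, inference-based methods can train a neural network even when the corpus vocabulary is so large that processing everything at once would be impractical.

- This works because the data is split into small pieces for training. The computation can also be parallelized (for example, across multiple GPUs), which further speeds up training.

- A representative example of an inference-based method is Word2Vec. I have written a separate post on it, linked below, so check it out if you are interested!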

[NLP] Word2Vec, CBOW, Skip-Gram - Concepts & Model


daehyun-bigbread.tistory.com
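The following minimal NumPy sketch makes the contrast above concrete. It uses this post's example sentence (introduced later), assumes a window size of 1, and skips the PPMI step for brevity. The first part builds the full co-occurrence matrix and runs one SVD over it, which is the count-based approach. The second part only shows how the same data would be visited in small shuffled mini-batches. The variable names and the batch size of 3 are my own choices for illustration.

```python
import numpy as np

# Example sentence from this post (lowercased, period treated as a word).
words = "you say goodbye and i say hello .".split()
word_to_id = {}
for w in words:
    word_to_id.setdefault(w, len(word_to_id))  # IDs in order of first appearance
corpus = np.array([word_to_id[w] for w in words])
vocab_size = len(word_to_id)

# Count-based: gather statistics over the WHOLE corpus first...
window_size = 1
C = np.zeros((vocab_size, vocab_size), dtype=np.int32)
for idx, word_id in enumerate(corpus):
    for offset in range(1, window_size + 1):
        if idx - offset >= 0:
            C[word_id, corpus[idx - offset]] += 1  # left neighbour
        if idx + offset < len(corpus):
            C[word_id, corpus[idx + offset]] += 1  # right neighbour

# ...then obtain dense vectors with a single SVD of the full vocab x vocab matrix.
U, S, Vt = np.linalg.svd(C.astype(np.float64))
word_vecs = U[:, :2]  # keep the first 2 dimensions as dense word vectors

# Inference-based: visit the data a few samples at a time instead.
batch_size = 3
rng = np.random.default_rng(0)
order = rng.permutation(len(corpus))
batches = [order[i:i + batch_size] for i in range(0, len(order), batch_size)]
for batch in batches:
    # A real model would compute the loss on this batch and update its weights here.
    print(batch)
```

For a 7-word vocabulary the matrix is only 7 × 7. It grows with the square of the vocabulary size, however, and the SVD has to see all of it at once. The mini-batch loop, by contrast, only touches `batch_size` samples per step.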

Overview of Inference-Based Methods

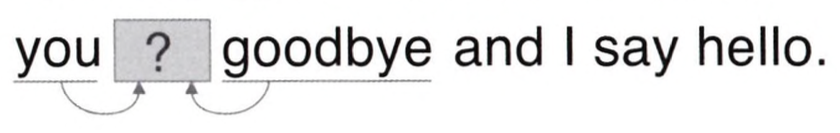

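In inference-based methods, the main task is 'inference'.

- What is inference? It is the task of guessing which word goes in the "?" position, given the surrounding words (the context).

- Solving and learning from inference problems like the one in the figure above is what inference-based methods are about.

- By solving such inference problems over and over, the model learns the patterns in which words appear.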
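The inference problem becomes concrete when you list every (context, target) pair in a sentence. The sketch below assumes a window of one word on each side. It uses this post's example sentence, with the period treated as a word. `make_contexts_target` is a hypothetical helper name, not a library function.

```python
import numpy as np

def make_contexts_target(corpus, window_size=1):
    """Build (context, target) pairs: the target is the word to guess ("?"),
    and the context is the window_size words on each side of it."""
    target = corpus[window_size:-window_size]
    contexts = []
    for idx in range(window_size, len(corpus) - window_size):
        cs = [corpus[idx + t] for t in range(-window_size, window_size + 1) if t != 0]
        contexts.append(cs)
    return np.array(contexts), np.array(target)

words = "you say goodbye and i say hello .".split()
word_to_id = {}
for w in words:
    word_to_id.setdefault(w, len(word_to_id))  # IDs in order of first appearance
id_to_word = {i: w for w, i in word_to_id.items()}
corpus = np.array([word_to_id[w] for w in words])

contexts, target = make_contexts_target(corpus, window_size=1)
for cs, t in zip(contexts, target):
    print([id_to_word[c] for c in cs], "->", id_to_word[t])
```

The first line printed is `['you', 'goodbye'] -> say`. In other words, the model must fill in "you ? goodbye" with "say". Each of the six pairs is one small inference problem.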

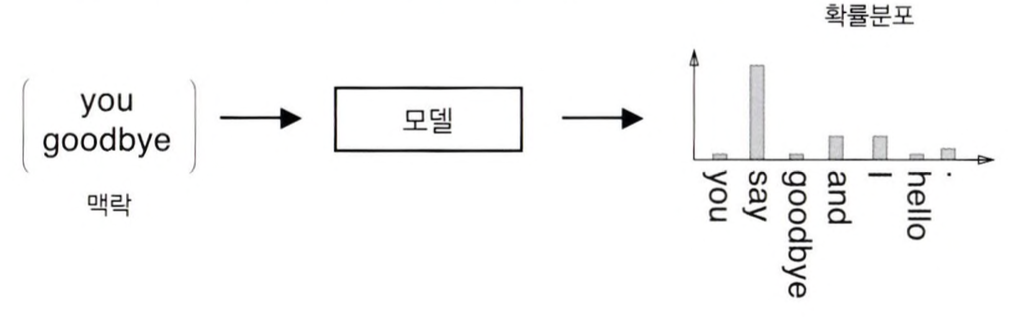

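- Here, the model is a neural network: it takes the context as input and outputs the probability of each word appearing.

- Within this framework, the model is trained on a corpus so that it makes correct guesses.

- The overall flow of an inference-based method is to obtain distributed word representations as a result of that training.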
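The following minimal forward pass, with untrained random weights, illustrates "takes the context, outputs a probability for each word". The architecture is an assumption for this sketch, since the post has not fixed a model at this point. It is CBOW-style: average the hidden vectors of the context words, then apply a second weight matrix and a softmax. `W_in`, `W_out`, `one_hot` and `softmax` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, hidden_size = 7, 3

W_in = rng.standard_normal((vocab_size, hidden_size))   # input-side weights (assumed)
W_out = rng.standard_normal((hidden_size, vocab_size))  # output-side weights (assumed)

def one_hot(word_id, size):
    v = np.zeros(size)
    v[word_id] = 1.0
    return v

def softmax(x):
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()

# Context: "you" (ID 0) and "goodbye" (ID 2); the correct answer is "say" (ID 1).
context_ids = [0, 2]
h = np.mean([one_hot(i, vocab_size) @ W_in for i in context_ids], axis=0)
scores = h @ W_out        # one score per word in the vocabulary
probs = softmax(scores)   # probability of each word appearing at "?"
print(probs.round(3), probs.sum())
```

Because the weights are random, these probabilities mean nothing yet. Training would adjust `W_in` and `W_out` so that the probability of the correct target ("say", ID 1) goes up.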

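Processing Words with a Neural Network

From here on, we will use a neural network to process 'words'.

- However, a neural network cannot process words such as "you" and "say" as they are.

- So we need to convert each word into a "fixed-length vector". A typical way to do this is to convert it into a one-hot vector.

A one-hot vector is a vector in which exactly one element is 1 and all the others are 0.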

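- Let's look at an example.

"You say goodbye and I say hello." - example sentence

- Treating the period "." as a word, this sentence contains 7 distinct words in its vocabulary: "you", "say", "goodbye", "and", "i", "hello", and ".". ("say" appears twice but is counted once.)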

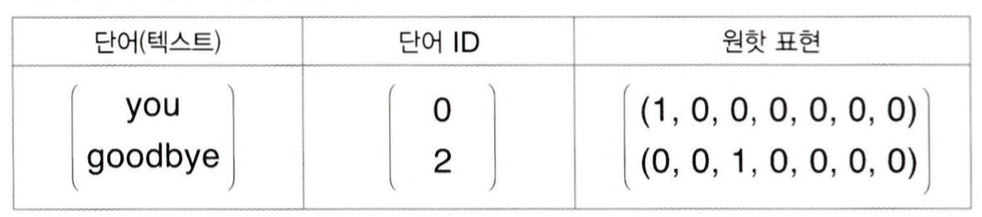

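- The figure shows the one-hot representations of two of these words.

- As shown in the figure, a word can be expressed as text, as a word ID, or as a one-hot vector.

- To build the one-hot representation, prepare a vector with as many elements as there are words in the vocabulary. Set the element whose index equals the word ID to 1, and set all the other elements to 0.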
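The one-hot construction just described takes only a few lines of NumPy. This sketch assumes word IDs are assigned in order of first appearance, and that the sentence is lowercased with the period treated as a word. Under these assumptions, "you" gets ID 0 and "goodbye" gets ID 2. `to_one_hot` is a hypothetical helper.

```python
import numpy as np

words = "you say goodbye and i say hello .".split()
word_to_id = {}
for w in words:
    word_to_id.setdefault(w, len(word_to_id))  # IDs in order of first appearance
vocab_size = len(word_to_id)

def to_one_hot(word_id, vocab_size):
    """Vector of length vocab_size: 1 at index word_id, 0 everywhere else."""
    vec = np.zeros(vocab_size, dtype=np.int32)
    vec[word_id] = 1
    return vec

print(word_to_id)  # {'you': 0, 'say': 1, 'goodbye': 2, 'and': 3, 'i': 4, 'hello': 5, '.': 6}
print("you", word_to_id["you"], to_one_hot(word_to_id["you"], vocab_size))              # you 0 [1 0 0 0 0 0 0]
print("goodbye", word_to_id["goodbye"], to_one_hot(word_to_id["goodbye"], vocab_size))  # goodbye 2 [0 0 1 0 0 0 0]

# The whole sentence at once: row k of the identity matrix is the one-hot vector for ID k.
corpus = np.array([word_to_id[w] for w in words])
one_hot_corpus = np.eye(vocab_size, dtype=np.int32)[corpus]  # shape (8, 7)
```

Every word, whatever its spelling, becomes a vector of the same length (7 here). That fixed length is what lets the input layer have a fixed number of neurons.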

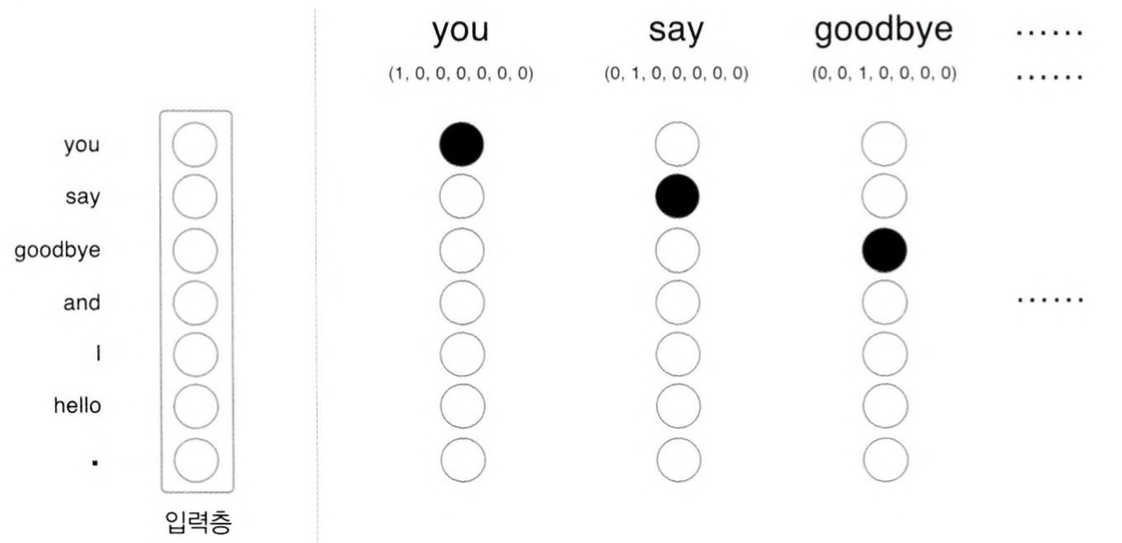

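- Once words are converted into fixed-length vectors like this, the number of neurons in the neural network's input layer can be fixed, as shown in the figure above.

- In the figure, the input layer has 7 neurons.

- These 7 neurons correspond, in order, to the 7 words in the vocabulary.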

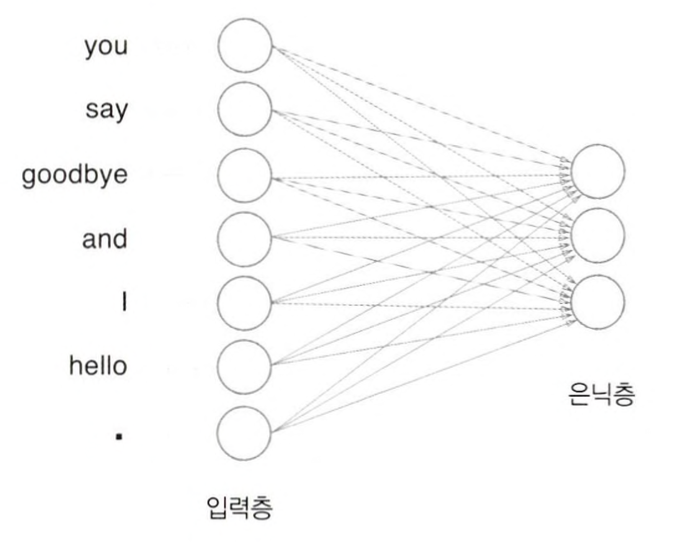

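- Because neural network layers process vectors, a word in one-hot form can be passed through a fully-connected layer.

- Since this network uses a fully-connected layer, every node is connected by an arrow to every node in the adjacent layer.

- Each arrow carries a weight. The value of each hidden-layer neuron is the weighted sum of the input-layer neurons: each input value is multiplied by the weight on its arrow, and the products are added together (no bias term is used here).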
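You can check the weighted-sum description numerically. Computing each hidden neuron with an explicit double loop gives the same result as a single matrix product. The sketch assumes 7 input neurons and 3 hidden neurons, matching the code in the next section, and, like that code, uses no bias.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.array([1, 0, 0, 0, 0, 0, 0], dtype=float)  # one-hot input (word ID 0)
W = rng.standard_normal((7, 3))  # one weight per arrow: 7 inputs -> 3 hidden neurons

# Hidden neuron j = sum over input neurons i of x[i] * W[i, j]
h_loop = np.zeros(3)
for j in range(3):
    for i in range(7):
        h_loop[j] += x[i] * W[i, j]

h_matmul = x @ W  # the same weighted sums, computed as one matrix product
print(np.allclose(h_loop, h_matmul))  # True
```

This equivalence is why a fully-connected layer is implemented as a matrix multiplication rather than a loop over arrows.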

Transformation by a Fully-Connected Layer: Code (Python)

The transformation performed by the fully-connected layer can be written as follows.

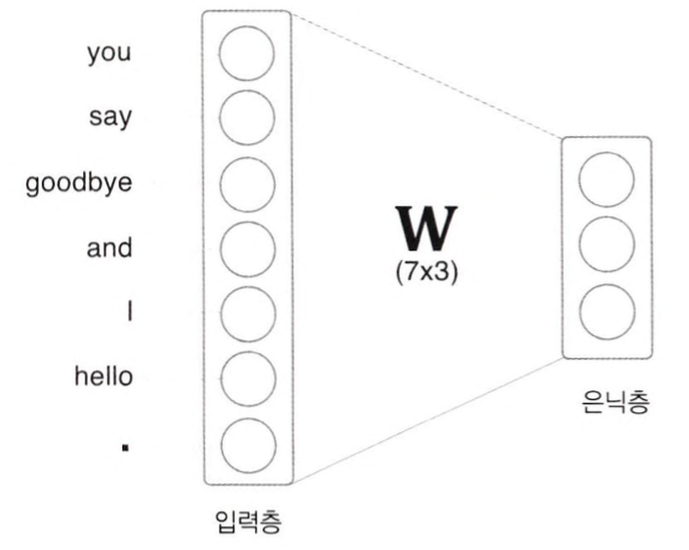

import numpy as np

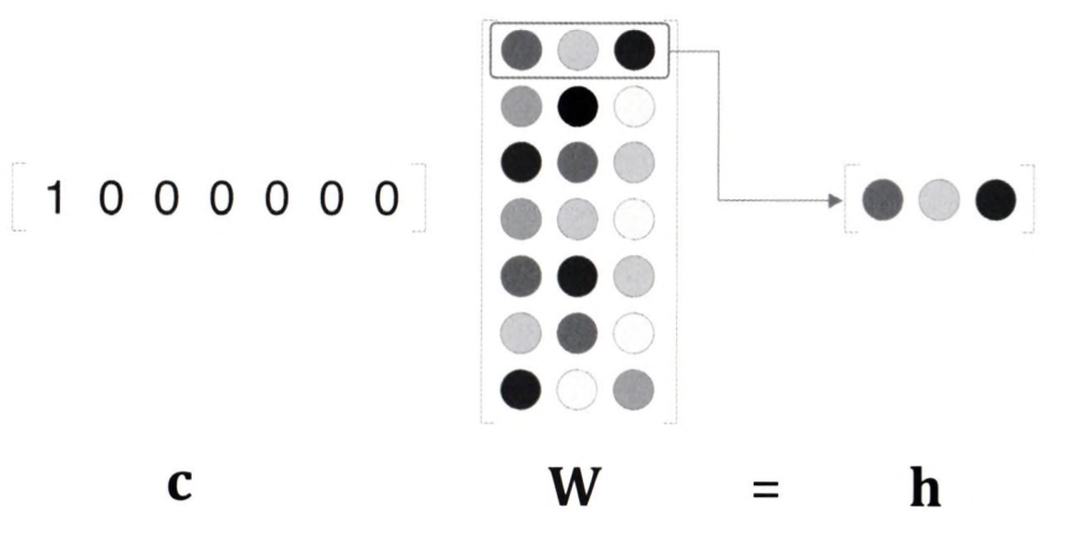

c = np.array([[1, 0, 0, 0, 0, 0, 0]]) # Input(입력)

W = np.random.randn(7, 3) # Weight(가중치)

h = np.matmul(c, W) # 중간 노드

print(h)

# [[-0.70012195 0.25204755 -0.79774592]]- 이 코드는 단어 ID가 0인 단어를 One-Hot 표현으로 표현한 다음, Fully-Connected Layer를 통과하여 변환하는 모습을 보여줍니다.

- c is the one-hot representation: a vector in which only the element at the word ID is 1 and all the others are 0.

- The fully-connected transformation implemented above can also be performed with the MatMul layer.

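```python
import sys
sys.path.append('..')  # make the parent directory's `common` package importable
import numpy as np
from common.layers import MatMul  # assumes common/layers.py defines the MatMul class shown below

c = np.array([[1, 0, 0, 0, 0, 0, 0]])  # one-hot input for word ID 0
W = np.random.randn(7, 3)  # weights
layer = MatMul(W)
h = layer.forward(c)  # forward propagation
print(h)
# example output (values vary because W is random):
# [[-0.70012195  0.25204755 -0.79774592]]
```

- This code sets the weight W on the MatMul layer and calls its forward() method to perform forward propagation.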
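Because c is one-hot, the matrix product does not actually mix any rows: it simply picks out one row of W. The short check below assumes the same 7 × 3 weight shape as above and confirms this for every word ID.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((7, 3))

for word_id in range(7):
    c = np.zeros((1, 7))
    c[0, word_id] = 1  # one-hot row vector for this word ID
    h = np.matmul(c, W)
    # Multiplying by a one-hot vector just selects row `word_id` of W.
    assert np.allclose(h[0], W[word_id])
print("c @ W equals W[word_id] for every word ID")
```

In practice, this is why multiplying a one-hot vector by W is often replaced by direct row indexing (`W[word_id]`). Indexing gives the same result without ever building the one-hot vector.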

MatMul Layer

The MatMul layer performs the matrix multiplication of a basic neural network layer and computes gradients via backpropagation.

```python
import numpy as np


class MatMul:
    def __init__(self, W):
        """
        Initialize the layer with the weight matrix W.

        Parameters:
            W (numpy.ndarray): weight matrix
        """
        self.params = [W]  # store the weight matrix W as a parameter
        self.grads = [np.zeros_like(W)]  # initialize the gradient as a zero matrix shaped like W
        self.x = None  # placeholder for the input x

    def forward(self, x):
        """
        Forward pass: compute the matrix product of the input x and the weights W.

        Parameters:
            x (numpy.ndarray): input data

        Returns:
            out (numpy.ndarray): matrix product of the input and the weights
        """
        W, = self.params  # unpack the weight W from params
        out = np.dot(x, W)  # matrix product of x and W
        self.x = x  # keep x for use in the backward pass
        return out

    def backward(self, dout):
        """
        Backward pass: given the output gradient dout, compute the
        gradients with respect to the input and the weights.

        Parameters:
            dout (numpy.ndarray): gradient of the output

        Returns:
            dx (numpy.ndarray): gradient with respect to the input
        """
        W, = self.params  # unpack the weight W from params
        dx = np.dot(dout, W.T)  # input gradient: dout times the transpose of W
        dW = np.dot(self.x.T, dout)  # weight gradient: transpose of x times dout
        self.grads[0][...] = dW  # write dW into the existing gradient array in place
        return dx  # return the input gradient
```

- __init__: the class's initialization method. It takes the weight matrix W and stores it in the params list.

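- It also initializes the gradient by storing a zero matrix with the same shape as W in the grads list.

- x is initialized so that the input can be stored later, during the forward pass.

- forward: the method that performs forward propagation.

- It computes the matrix product of the input x and the weights W and returns the result.

- Along the way, it stores x as an instance variable so that the backward pass can use it later.

- backward: the method that performs backpropagation.

- It takes dout, the gradient with respect to the output, and computes dx, the gradient with respect to the input, and dW, the gradient with respect to the weights.

- The computed dW is stored in the grads list and dx is returned. Writing with `self.grads[0][...] = dW` overwrites the contents of the existing array in place. As a result, any code holding a reference to that gradient array (an optimizer, for example) sees the update.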
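A numerical gradient check is a common way to confirm that backward() is correct. Nudge each weight slightly, measure how the loss changes, and compare the result with dW. The sketch below repeats a compact copy of the MatMul class so it runs on its own. It assumes a toy loss L = sum(out), so the output gradient dout is a matrix of ones. The batch size of 2 and the epsilon are arbitrary choices.

```python
import numpy as np

class MatMul:
    # Compact copy of the MatMul layer above, so this block runs on its own.
    def __init__(self, W):
        self.params = [W]
        self.grads = [np.zeros_like(W)]
        self.x = None

    def forward(self, x):
        W, = self.params
        self.x = x
        return np.dot(x, W)

    def backward(self, dout):
        W, = self.params
        dx = np.dot(dout, W.T)
        self.grads[0][...] = np.dot(self.x.T, dout)
        return dx

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 7))  # mini-batch of 2 inputs
W = rng.standard_normal((7, 3))
layer = MatMul(W)

# Toy loss L = sum(out), so dL/dout is a matrix of ones.
out = layer.forward(x)
dx = layer.backward(np.ones_like(out))
dW = layer.grads[0]

# Numerical gradient of L with respect to W, by central differences.
eps = 1e-5
num_dW = np.zeros_like(W)
for i in range(W.shape[0]):
    for j in range(W.shape[1]):
        orig = W[i, j]
        W[i, j] = orig + eps  # W is the same array the layer holds in params
        loss_plus = layer.forward(x).sum()
        W[i, j] = orig - eps
        loss_minus = layer.forward(x).sum()
        W[i, j] = orig  # restore the original weight
        num_dW[i, j] = (loss_plus - loss_minus) / (2 * eps)

print(np.allclose(dW, num_dW, atol=1e-6))  # True
```

The layer is linear in W, so the central-difference estimate matches `x.T @ dout` up to floating-point rounding. For a non-linear layer, the same check holds only approximately, so a looser tolerance is usually needed.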

