1. Limitations of the Basic RNN Model (Vanilla RNN Model)

In the earlier post on RNNs, we looked at the basic RNN model and implemented it.

- The basic RNN model is the simplest form of RNN, and it is commonly called a Vanilla RNN.

- However, the Vanilla RNN has shortcomings, and a variety of RNN variants have been proposed to overcome them.

- The best-known variants are LSTM and GRU. This post covers the LSTM model, and the next post covers the GRU model.

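A Vanilla RNN produces its output based on the results computed at previous time steps.

- This approach works well for short sequences, but with long sequences, information from early time steps is not carried forward well.

- The reason is that an RNN has to compress everything it has seen into a fixed-size hidden state, and this compression loses more information as the sequence grows longer.

- As noted in the RNN post, an RNN processes its input in order and tends to forget words it read long ago.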

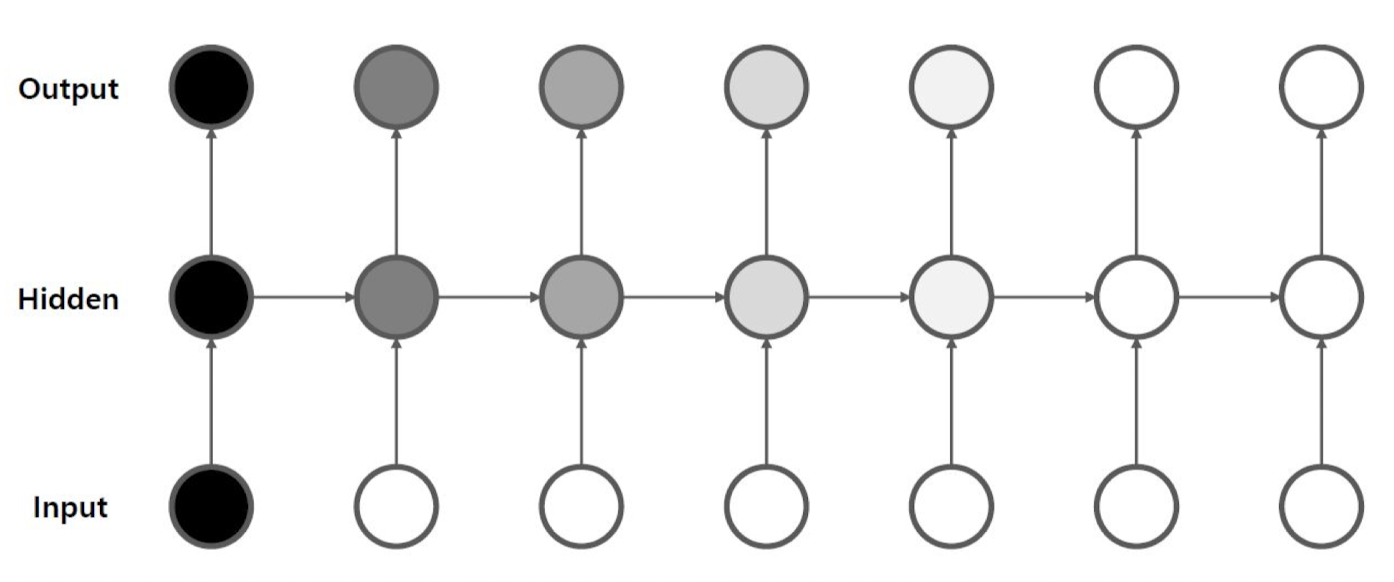

In the figure above, the dark black color of the first input to the Vanilla RNN represents how strongly that input is remembered.

- As we move on to later time steps, the color gradually fades.

- This shows the information from the first input being lost as the time steps advance. In other words, the model gradually forgets it.

- In short, the memory of the first input keeps fading, and if the sequence is long, its influence on the RNN's output all but disappears.

2. The Long-Term Dependency Problem

This phenomenon is called the long-term dependency problem.

- As time steps pass, the influence of an input gradually decreases.

- The longer the sequence, the less well the model reflects information from inputs it saw long ago.
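The fading influence described above can be made concrete with a small NumPy sketch. It is only an illustration under stated assumptions, not a measurement from this post: the hidden size, the random inputs, and the choice of a hidden-to-hidden matrix scaled to spectral norm 0.9 are all hypothetical. The code tracks how sensitive the current hidden state is to the initial hidden state, which serves as a proxy for how much an early input still matters.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_size, input_size = 8, 4

# Assumption: W_hh is an orthogonal matrix scaled to spectral norm 0.9,
# so every step can only shrink the signal coming from the past.
q, _ = np.linalg.qr(rng.standard_normal((hidden_size, hidden_size)))
W_hh = 0.9 * q
W_xh = rng.standard_normal((hidden_size, input_size)) * 0.1

h = np.zeros(hidden_size)
jacobian = np.eye(hidden_size)  # d h_t / d h_0, starts as the identity
norms = []
for t in range(30):
    x = rng.standard_normal(input_size)  # hypothetical random input
    h = np.tanh(W_hh @ h + W_xh @ x)
    # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh
    jacobian = (np.diag(1.0 - h**2) @ W_hh) @ jacobian
    norms.append(np.linalg.norm(jacobian, 2))

# Sensitivity to the starting state after 1, 10, and 30 steps
print(norms[0], norms[9], norms[29])
```

Because the derivative of tanh is at most 1 and the weight matrix has norm 0.9, the sensitivity after t steps is bounded by 0.9 to the power t, so it shrinks geometrically. With a larger weight norm the same product can grow instead, which is the exploding counterpart of the same issue.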

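💡 The long-term dependency problem

Let's walk through an example.

- The long-term dependency problem causes trouble when the first input, or an input near the beginning, carries important information.

💡 Example of the long-term dependency problem

"These days, for flights to Japan, the fare from Incheon Airport to Narita Airport is more expensive than the fare from Gimpo Airport to Haneda Airport. So I should go with the cheaper one. That is why I am going to fly out of ___"

- To predict the word that fills the blank, the model needs to remember the locations mentioned earlier.

- However, the airport names that carry this information, such as "Incheon Airport", appear near the beginning of the text.

- What if the RNN model does not have enough memory? It will probably predict the wrong next word.

- This is the long-term dependency problem.

- To make it easier to see how an RNN model actually operates, the next section walks through its training and test procedures.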

3. RNN Training & Test Example

RNN Training Example

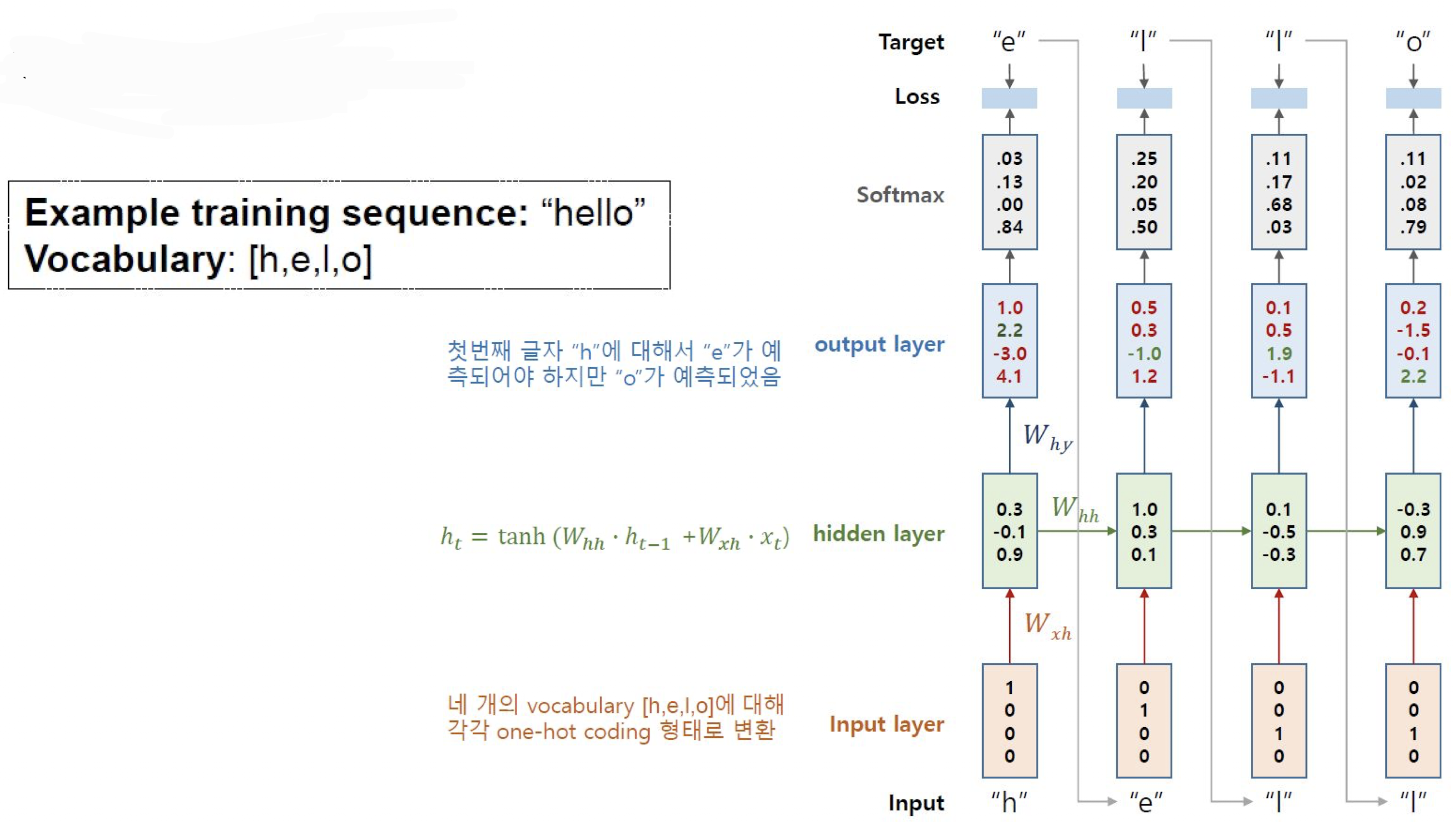

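In this example, the RNN is a model that generates strings in the same style as its training data, and it is trained at the character level.

- For example, to produce the word "hello", we give "h" to the RNN as the first input and the model generates "ello".

- The training data is given as a vocabulary (Vocab) of characters or words.

- Example training sequence: "hello"

- Vocabulary: [h, e, l, o]

- First, the input "h" is converted into a one-hot encoding over the four vocabulary entries [h, e, l, o].

- The hidden layer computation then uses this one-hot vector together with the hidden state from the previous time step.

- The one-hot input is multiplied by the input-to-hidden weight matrix Wxh, and the previous hidden state is multiplied by the hidden-to-hidden weight matrix Whh.

- Although it is not shown in the figure, a bias is also added, and the result is passed through an activation function to obtain the new hidden state.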

hidden layer computation: ht = σ(Whh⋅ht−1 + Wxh⋅xt + bh), *[σ is the activation function (tanh in a Vanilla RNN; softmax is applied only at the output layer), and bh is the bias]
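As a small illustration of the one-hot encoding step and of the term that multiplies the input in the formula above, here is a minimal NumPy sketch. The names `one_hot`, `char_to_idx`, and `W_xh` (the input-to-hidden weight matrix), as well as the hidden size of 3 and the random weights, are assumptions made for this example.

```python
import numpy as np

vocab = ["h", "e", "l", "o"]
char_to_idx = {ch: i for i, ch in enumerate(vocab)}

def one_hot(ch):
    """Return a vector with a 1 at the character's vocabulary index."""
    vec = np.zeros(len(vocab))
    vec[char_to_idx[ch]] = 1.0
    return vec

x_h = one_hot("h")
print(x_h)  # [1. 0. 0. 0.]

# Hypothetical input-to-hidden weights (hidden size 3, random values)
rng = np.random.default_rng(0)
W_xh = rng.standard_normal((3, len(vocab)))

# Multiplying by a one-hot vector simply picks out one column of W_xh
contribution = W_xh @ one_hot("l")
print(np.allclose(contribution, W_xh[:, char_to_idx["l"]]))  # True
```

This is why a one-hot input acts like a lookup: each character selects its own column of the input weight matrix.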

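- Once the hidden state ht has been computed with this formula, it is sent in two directions: it is multiplied by Why and passed to the output layer, and it is carried over to the next time step, where it is multiplied by Whh.

Note: Whh is the weight matrix between hidden layers (hidden to hidden), Why is the weight matrix from the hidden layer to the output layer, and Wxh is the weight matrix from the input to the hidden layer.

Output layer formula using Why: yt = Why⋅ht [yt: output at the current time step, ht: hidden state at the current time step]

Next time step formula using Whh: ht+1 = σ(Whh⋅ht + Wxh⋅xt+1 + bh) [ht+1: hidden state at the next time step]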

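- Next, the softmax function turns the output into probabilities over the four vocabulary entries [h, e, l, o]. During training, the target at each step is the next character of the training sequence, and the probability the model assigns to that target determines the loss. The next character of the sequence is then fed in as the next input.

- The same steps then repeat for the next input: one-hot encoding, the weight matrix computations, passing the result to the output layer and to the next time step, and applying softmax. This continues for the length of the input sequence.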
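The repeated steps above can be sketched as a forward pass over the "hello" example. This is a rough sketch rather than the exact setup behind the figure: the hidden size, the small random weights, and the use of cross-entropy as the loss are assumptions, and no weight update (backpropagation) is shown.

```python
import numpy as np

vocab = ["h", "e", "l", "o"]
char_to_idx = {ch: i for i, ch in enumerate(vocab)}
V, H = len(vocab), 3  # vocabulary size, hypothetical hidden size

rng = np.random.default_rng(0)
W_xh = rng.standard_normal((H, V)) * 0.1  # input -> hidden
W_hh = rng.standard_normal((H, H)) * 0.1  # hidden -> hidden
W_hy = rng.standard_normal((V, H)) * 0.1  # hidden -> output
b_h = np.zeros(H)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

inputs, targets = "hell", "ello"  # each target is the next character
h = np.zeros(H)
loss = 0.0
probs_per_step = []
for x_ch, y_ch in zip(inputs, targets):
    x = np.zeros(V)
    x[char_to_idx[x_ch]] = 1.0              # one-hot encoding
    h = np.tanh(W_hh @ h + W_xh @ x + b_h)  # new hidden state
    p = softmax(W_hy @ h)                   # probabilities over the vocab
    probs_per_step.append(p)
    loss += -np.log(p[char_to_idx[y_ch]])   # cross-entropy for this step

print(loss)
```

With untrained weights the probabilities are close to uniform, so the loss is high. Training would adjust the three weight matrices to push probability toward each target character.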

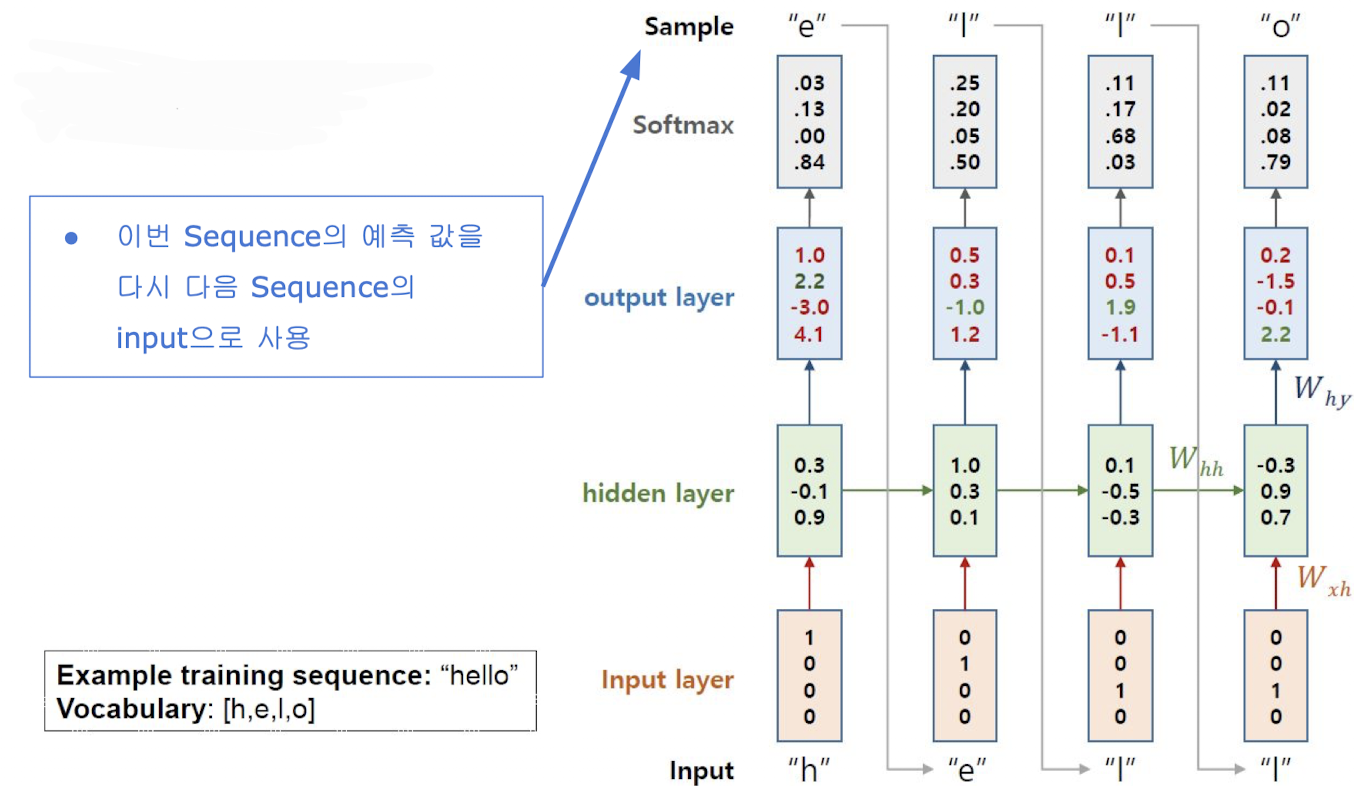

RNN Test Example

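Now let's look at an example of the RNN test procedure.

- The test procedure is similar to the training procedure. Here I will fill in some details that were left out of the training explanation.

- In the figure above, after one pass the sampled value "e" comes out. It is fed back in as the second input.

- As for training, you can think of it this way: when "hell" is given as the input, the model learns to output "ello".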
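The feedback loop at test time, where each output becomes the next input, might look like the sketch below. Several things here are assumptions: the helper name `generate`, the hidden size, and picking the most probable character (greedy choice) instead of sampling from the distribution. The weights are random and untrained, so the generated string is arbitrary and will not spell "ello".

```python
import numpy as np

vocab = ["h", "e", "l", "o"]
char_to_idx = {ch: i for i, ch in enumerate(vocab)}
V, H = len(vocab), 3

rng = np.random.default_rng(0)
W_xh = rng.standard_normal((H, V)) * 0.1
W_hh = rng.standard_normal((H, H)) * 0.1
W_hy = rng.standard_normal((V, H)) * 0.1
b_h = np.zeros(H)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def generate(first_char, steps):
    """Feed first_char, then feed each predicted character back in."""
    h = np.zeros(H)
    ch = first_char
    out = []
    for _ in range(steps):
        x = np.zeros(V)
        x[char_to_idx[ch]] = 1.0
        h = np.tanh(W_hh @ h + W_xh @ x + b_h)
        p = softmax(W_hy @ h)
        ch = vocab[int(np.argmax(p))]  # greedy pick (an assumption)
        out.append(ch)
    return "".join(out)

generated = generate("h", 4)
print(generated)
```

Replacing the greedy pick with `rng.choice(len(vocab), p=p)` would sample from the distribution instead, which is closer to the "Sample" step in the figure.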

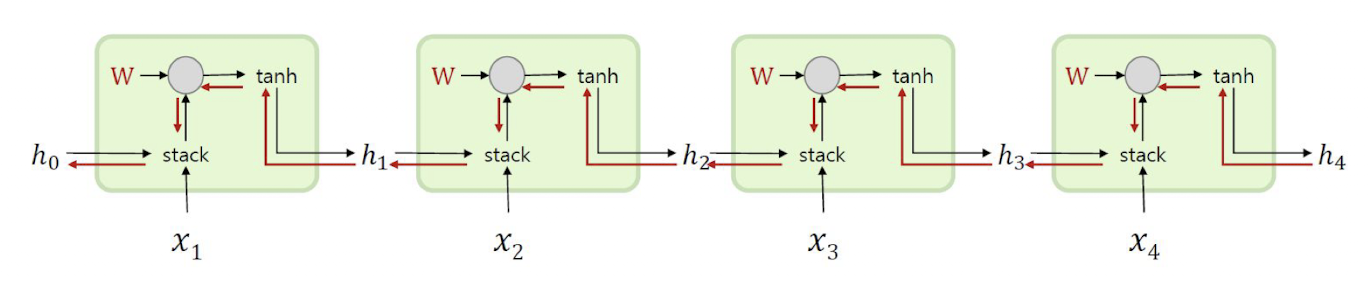

4. Inside the Vanilla RNN

Let's open up the Vanilla RNN and look inside.

- The internals of the Vanilla RNN will be easier to follow once you understand the RNN Training & Test Example above.

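ht = tanh(Whh⋅ht−1 + Wxh⋅xt + bh) *[tanh is the activation function, and bh is the bias]

- Here, Whh is the weight matrix between hidden layers, and Wxh is the weight matrix from the input to the hidden layer.

- Why is the weight matrix from the hidden layer to the output layer.

- Output layer formula using Why: yt = Why⋅ht [yt: output at the current time step, ht: hidden state at the current time step]

- Next time step formula using Whh: ht+1 = tanh(Whh⋅ht + Wxh⋅xt+1 + bh)

- [ht+1 is the hidden state at the next time step, and ht−1 in the formula is the hidden state at the previous time step]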

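Going back to the figure:

- The cell receives the input xt at the current time step.

- It receives ht−1, the hidden state from the previous time step.

- Each is multiplied by its weight matrix (Wxh for xt, Whh for ht−1), and the results are added together with the bias.

- The tanh activation function is then applied.

- The resulting value is ht, the hidden state at the current time step t.

- In summary, the two inputs of a Vanilla RNN, xt and ht−1, are each multiplied by their weight matrices (Wxh and Whh) and become the input to the memory cell.

- This sum is fed into the tanh (hyperbolic tangent) function, and the result is the hidden state, the output of the hidden layer.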
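The steps listed above can be gathered into a small cell object. This is a minimal sketch under assumptions, not the implementation from the earlier RNN post: the class name `VanillaRNNCell`, the sizes, and the random initialization are all hypothetical.

```python
import numpy as np

class VanillaRNNCell:
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        self.W_xh = rng.standard_normal((hidden_size, input_size)) * 0.1
        self.W_hh = rng.standard_normal((hidden_size, hidden_size)) * 0.1
        self.b_h = np.zeros(hidden_size)

    def step(self, x_t, h_prev):
        # Multiply each input by its own weight matrix and add the bias
        pre_activation = self.W_xh @ x_t + self.W_hh @ h_prev + self.b_h
        # tanh squashes the result into (-1, 1); this is the hidden state
        return np.tanh(pre_activation)

cell = VanillaRNNCell(input_size=4, hidden_size=3)
h = np.zeros(3)   # initial hidden state
xs = np.eye(4)    # four one-hot inputs, one per row
states = []
for x_t in xs:
    h = cell.step(x_t, h)  # h_{t-1} goes in, h_t comes out
    states.append(h)

print(states[-1])
```

Note that the same `W_xh`, `W_hh`, and `b_h` are reused at every time step. Only the hidden state changes as the sequence is processed.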
