1. Sequence-to-sequence

💡 The Transformer model was designed for sequence-to-sequence tasks such as machine translation.

- Sequence: an ordered list of items, such as words.

- Sequence-to-sequence, then, is the task of converting a sequence with one set of properties into a sequence with different properties.

- Classic RNN-based sequence-to-sequence models use the RNN's many-to-many setup. I will explain RNNs themselves later.

💡 Example

Machine translation: the task of converting a sequence of words in one language (the source language) into a sequence of words in another language (the target language).

- Note that the source sequence (6 words) and the target sequence (10 words) have different lengths.

- Caveat: a sequence-to-sequence model must work even when the source and target lengths differ.

- A model that cannot handle differing source and target lengths would break or fail to run on such inputs.

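2. Sequence-to-sequence basics

In sequence-to-sequence machine translation, the model takes an input sequence and produces an output sequence.

- As explained earlier in 3-1, the input sequence is written $x_1, x_2, \dots, x_n$ and the output sequence is written $y_1, y_2, \dots, y_m$.

- The input and output sequences can have different lengths ($n$ and $m$ need not be equal).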

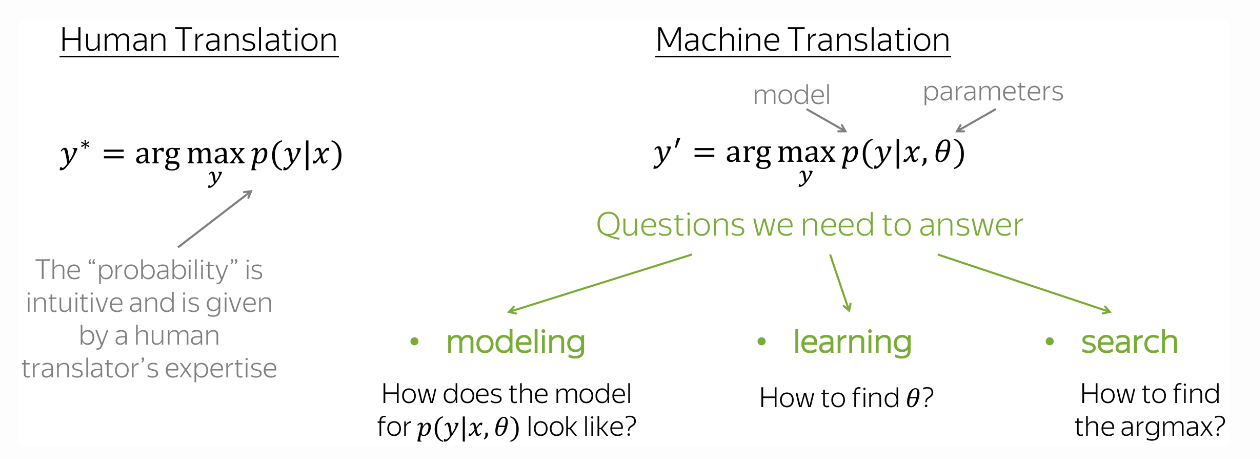

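Translation can be thought of as finding the most probable output sequence for a given input.

- A conditional probability can express what a human translator does: $y^* = \arg\max_y p(y \mid x)$.

- A machine translation system instead uses a model $p(y \mid x, \theta)$ and searches for the output that maximizes it: $\hat{y} = \arg\max_y p(y \mid x, \theta)$.

- Here θ (theta) denotes the model's parameters.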
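RNN decoders make $p(y \mid x, \theta)$ computable with a standard factorization based on the chain rule. The model predicts one target word at a time, conditioned on the source and on the words it has already produced ($m$ is the length of $y$):

```latex
p(y \mid x, \theta) = \prod_{t=1}^{m} p(y_t \mid y_1, \dots, y_{t-1}, x, \theta)

\hat{y} = \arg\max_{y} \prod_{t=1}^{m} p(y_t \mid y_1, \dots, y_{t-1}, x, \theta)
```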

To build a machine translation system, we need to answer three questions:

- Modeling: what does the model $p(y \mid x, \theta)$ look like, and how does it work?

- Learning: how do we find the parameters θ?

- Inference: how do we find the best output $y$?
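The following minimal sketch makes the three parts concrete, under heavy assumptions:

- The "model" is a hypothetical hand-written table, `TOY_MODEL`. It holds next-word probabilities for one fixed source sentence and stands in for $p(y_t \mid y_{<t}, x, \theta)$.
- Learning is skipped entirely.
- Inference is brute-force enumeration of every complete output. A greedy search is included for comparison.

```python
# Hypothetical toy model for ONE fixed source sentence x:
# maps the prefix generated so far (y_1..y_{t-1}) to p(y_t | prefix, x, theta).
TOY_MODEL = {
    (): {"the": 0.5, "a": 0.4, "<eos>": 0.1},
    ("the",): {"cat": 0.3, "dog": 0.3, "<eos>": 0.4},
    ("a",): {"cat": 0.9, "<eos>": 0.1},
    ("the", "cat"): {"<eos>": 1.0},
    ("the", "dog"): {"<eos>": 1.0},
    ("a", "cat"): {"<eos>": 1.0},
}


def sequence_prob(seq):
    """Chain rule: p(y | x) = product over t of p(y_t | y_<t, x)."""
    prob = 1.0
    for t, word in enumerate(seq):
        prob *= TOY_MODEL[seq[:t]][word]
    return prob


def exhaustive_argmax():
    """Inference by brute force: score every complete sequence, keep the best."""
    best, best_prob = None, 0.0
    stack = [()]
    while stack:
        prefix = stack.pop()
        for word in TOY_MODEL[prefix]:
            seq = prefix + (word,)
            if word == "<eos>":
                p = sequence_prob(seq)
                if p > best_prob:
                    best, best_prob = seq, p
            else:
                stack.append(seq)
    return best, best_prob


def greedy():
    """Greedy inference: always take the single most probable next word."""
    seq, prob = (), 1.0
    while not seq or seq[-1] != "<eos>":
        dist = TOY_MODEL[seq]
        word = max(dist, key=dist.get)
        prob *= dist[word]
        seq += (word,)
    return seq, prob


print(exhaustive_argmax())
print(greedy())
```

- With these numbers, `exhaustive_argmax()` finds `('a', 'cat', '<eos>')` with probability of about 0.36.
- `greedy()` commits to `'the'` (0.5) at the first step and ends at `('the', '<eos>')` with probability 0.2.
- Brute force is only feasible for tiny vocabularies, so real decoders use greedy or beam search instead.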

3. Sequence-to-sequence architecture

- Let's look at how a sequence-to-sequence (seq2seq) model is put together.

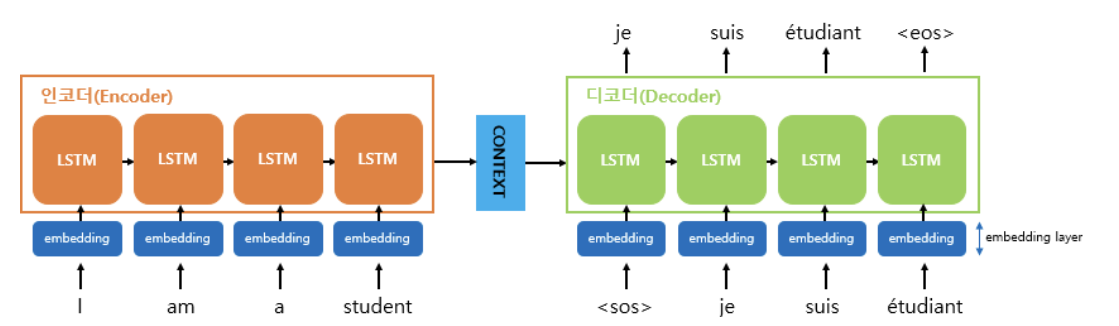

Models that perform sequence-to-sequence (seq2seq) tasks usually consist of two parts: an encoder and a decoder.

Encoder

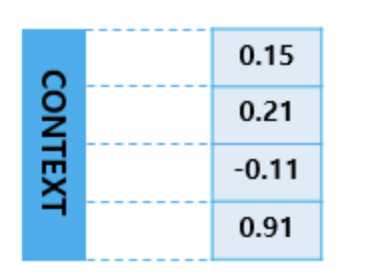

- The encoder reads every word of the input sentence (the source sequence) in order. At the end, it compresses all of them into a single vector.

- This vector is called the context vector.

- Once the input sentence has been compressed into the context vector, the encoder passes the context vector to the decoder.

- The context vector usually has hundreds of dimensions.

Decoder

- The decoder takes the context vector and outputs the translated words one at a time, in order.

RNN, LSTM

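Now let's look inside the encoder and the decoder.

- Both the encoder and the decoder are built from RNNs.

- An RNN (Recurrent Neural Network) is a neural network architecture designed to process sequential data.

- It keeps an internal state while it processes a sequence, so information from earlier steps can influence later ones.

- However, when a sequence gets long, an RNN struggles to remember information from far back. This is usually attributed to the vanishing gradient problem, also called the short-term memory problem.

- In other words, on long sequences the RNN tends to forget the beginning of the sequence.

- For this reason the encoder and decoder typically use the LSTM, a variant of the RNN.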

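LSTM (Long Short-Term Memory) - I will cover this, together with RNNs, in more detail in a separate post.

- A variant of the RNN designed to mitigate the vanishing gradient (short-term memory) problem.

- A vanilla RNN carries only a hidden state. An LSTM also carries a cell state, which lets it learn long-term dependencies more effectively.

- Internally, the encoder and decoder are two separate RNNs.

- The RNN cells that read the input sequence (sentence) form the encoder. The RNN cells that produce the output sequence (sentence) form the decoder.

- In the figure above, the encoder's RNN cells and the decoder's RNN cells are drawn in different colors.

- In practice these are not vanilla RNN cells but LSTM or GRU cells.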
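The following minimal sketch shows how the cell state differs from the hidden state. It computes one LSTM time step in the standard formulation: forget, input, and output gates plus a candidate value. It is an illustration, not code from this post, and it makes two assumptions:

- The input, hidden state, and cell state are single numbers (hidden size 1).
- The weights in `params` are hypothetical placeholders that a real model would learn.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step with scalar input, hidden state, and cell state.

    params maps each gate name ('f', 'i', 'o', 'g') to hypothetical
    weights (w_x, w_h, b)."""
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("f"))    # forget gate: how much old cell state to keep
    i = sigmoid(pre_activation("i"))    # input gate: how much new content to write
    o = sigmoid(pre_activation("o"))    # output gate: how much cell state to expose
    g = math.tanh(pre_activation("g"))  # candidate content to write
    c = f * c_prev + i * g              # cell state: additive update
    h = o * math.tanh(c)                # hidden state sent to the next step / layer
    return h, c


# Hypothetical weights, run over a short input sequence.
params = {"f": (0.5, 0.1, 1.0), "i": (0.8, -0.2, 0.0),
          "o": (0.6, 0.3, 0.0), "g": (1.0, 0.5, 0.0)}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The cell state is updated additively (`f * c_prev + i * g`) rather than squashed through a nonlinearity at every step. When the forget gate stays close to 1, information and gradients can survive across many steps. This is the main reason LSTMs cope better with long sequences than vanilla RNNs.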

How the encoder works

Encoder

- The encoder splits the input sequence (sentence) into word tokens. Each token becomes the input to the RNN cell at one time step.

- After reading every word, the encoder passes the hidden state from its last time step to the decoder. This hidden state is the context vector.

- The context vector is used as the decoder RNN's first hidden state.
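The encoder steps above can be sketched in a few lines of plain Python. This minimal illustration makes three assumptions:

- Tokenization is a naive whitespace split.
- The cell is a vanilla RNN, h_t = tanh(W_x x_t + W_h h_{t-1} + b), instead of the LSTM/GRU used in practice.
- All weights and embeddings are random placeholders rather than trained values.

The point is the data flow. The encoder processes one token per time step, and the last hidden state becomes the context vector. That vector's size is fixed no matter how long the sentence is.

```python
import math
import random

random.seed(0)
EMB_DIM, HIDDEN = 4, 3  # toy sizes; real models use hundreds of dimensions


def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Random placeholder parameters (a trained encoder would learn these).
W_x = rand_matrix(HIDDEN, EMB_DIM)
W_h = rand_matrix(HIDDEN, HIDDEN)
b = [0.0] * HIDDEN
embeddings = {}  # hypothetical embedding table, filled on first use


def embed(token):
    if token not in embeddings:
        embeddings[token] = [random.uniform(-1, 1) for _ in range(EMB_DIM)]
    return embeddings[token]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_step(x, h_prev):
    # h_t = tanh(W_x x_t + W_h h_{t-1} + b)
    return [math.tanh(p + q + r) for p, q, r in zip(matvec(W_x, x), matvec(W_h, h_prev), b)]


def encode(sentence):
    tokens = sentence.split()   # naive whitespace tokenization (assumption)
    h = [0.0] * HIDDEN          # initial hidden state
    for token in tokens:        # one token per time step
        h = rnn_step(embed(token), h)
    return h                    # last hidden state = context vector


short_ctx = encode("I am a student")
long_ctx = encode("this is a much longer input sentence with many more words")
print(len(short_ctx), len(long_ctx))  # 3 3
```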

Context Vector

- As the figure above shows, word embeddings turn the text into vectors before it enters each LSTM in the encoder.

- In other words, every word used in seq2seq is first converted into an embedding vector and then fed to the LSTM as input.

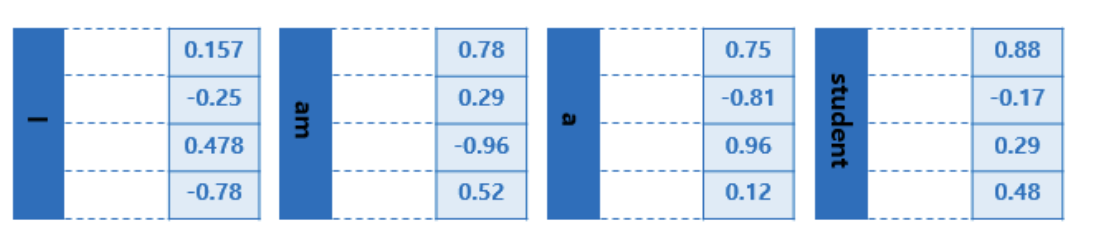

- The figure above shows the embedding layer, the step that passes every word through an embedding.
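An embedding layer is essentially a lookup table. Each word is mapped to an integer id, and the id selects one row of an embedding matrix. The sketch below makes two assumptions:

- The vocabulary is tiny and hypothetical.
- The embeddings have 4 dimensions and are filled with random numbers.

In a real seq2seq model the matrix is learned during training or initialized from pre-trained embeddings.

```python
import random

random.seed(42)
vocab = ["<pad>", "<sos>", "<eos>", "i", "am", "a", "student"]  # hypothetical vocabulary
word_to_id = {word: idx for idx, word in enumerate(vocab)}
EMB_DIM = 4  # toy size; real embeddings often have hundreds of dimensions

# Embedding matrix: one row per vocabulary entry (random here, learned in practice).
embedding_matrix = [[random.gauss(0.0, 1.0) for _ in range(EMB_DIM)] for _ in vocab]


def embedding_layer(tokens):
    """Map each token to its id, then to that row of the embedding matrix."""
    return [embedding_matrix[word_to_id[token]] for token in tokens]


vectors = embedding_layer("i am a student".split())
print(len(vectors), len(vectors[0]))  # 4 4
```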

RNN cells in the encoder

- As mentioned, NLP uses word embeddings to turn text into vectors.

- Embedding vectors typically have hundreds of dimensions. In the figure below they have only 4 dimensions, but real embeddings can have hundreds.

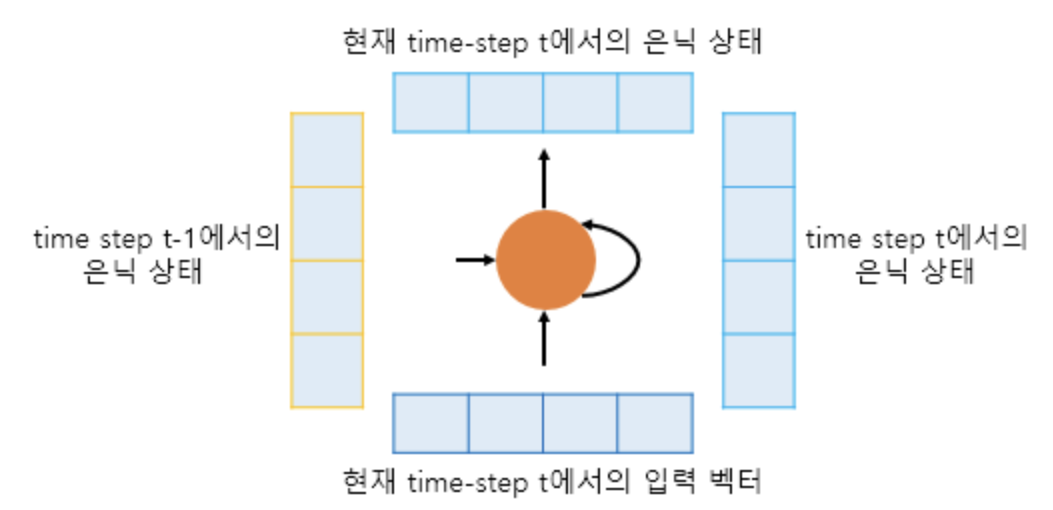

- At each time step, an RNN cell takes two inputs.

- If the current time step is t, the RNN cell takes the hidden state from t-1 and the input vector at t, and produces the hidden state at t.

- The hidden state at t is then sent up to the next hidden layer or output layer, if there is one. If nothing above needs it, it is simply ignored.

- The RNN cell also passes the hidden state at t as input to the RNN cell at the next time step, t+1.

In this structure, the hidden state at t accumulates the influence of all earlier hidden states of the same RNN cell.

- The context vector is therefore the hidden state of the encoder's last RNN cell. It holds a summary of all the word tokens in the input sentence.
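For a vanilla RNN cell, the update described above is usually written as below:

- $x_t$ is the embedding of the $t$-th input token.
- $h_0$ is the initial hidden state, often all zeros.
- $W_x$, $W_h$, $b$ are the cell's parameters, shared across time steps.

LSTM and GRU cells add gates but keep the same recurrent structure. Unrolling three steps shows why $h_t$ carries the accumulated influence of every earlier input, and why the last state $h_n$ can serve as the context vector:

```latex
h_t = \tanh\left(W_x x_t + W_h h_{t-1} + b\right)

h_3 = \tanh\Big(W_x x_3 + W_h \tanh\big(W_x x_2 + W_h \tanh(W_x x_1 + W_h h_0 + b) + b\big) + b\Big)

\text{context vector} = h_n
```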

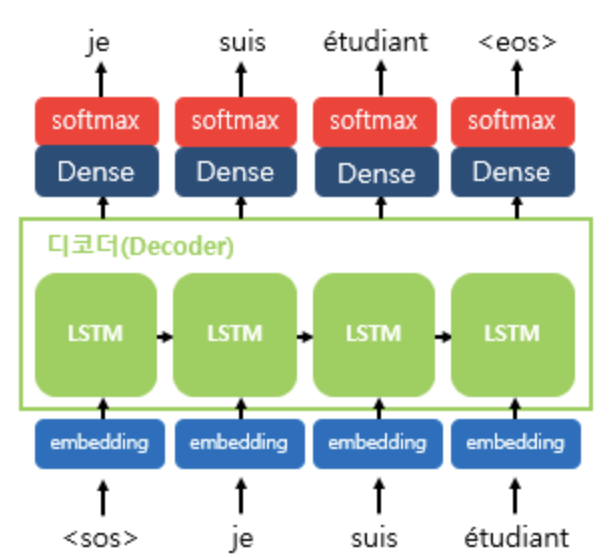

How the decoder works

Decoder

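- The decoder is an RNN language model (RNNLM) whose initial state comes from the context vector.

- Conceptually, the decoder starts the sentence by taking a <sos> (start of sequence) token as its first input.

- Given <sos>, it predicts the most probable next word.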

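RNN cells in the decoder

- The decoder uses the context vector, i.e. the hidden state of the encoder's last RNN cell, as its initial hidden state.

- The decoder's first RNN cell predicts the next word from this initial hidden state and the input at the current time step t, which is <sos>.

- The predicted word, i.e. the word that follows <sos>, becomes the input to the RNN cell at the next time step, t+1.

- The RNN cell at t+1 in turn uses this input and the hidden state from t to compute the output vector at t+1, which predicts the next word.

- At every step the seq2seq model has to pick one word out of all the words it could choose (its vocabulary).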
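The decoding loop above can be sketched as greedy decoding: start from the context vector and <sos>, predict a word, and feed it back in. Decoding stops at <eos> or after `max_len` steps. Everything here is a hypothetical placeholder:

- The target vocabulary is tiny.
- The weights are random and untrained.
- A vanilla RNN cell stands in for an LSTM.

The words it produces are therefore meaningless; only the control flow matters. Taking the argmax of the raw scores picks the same word as taking the argmax after softmax, because softmax preserves the ordering of its inputs.

```python
import math
import random

random.seed(1)
VOCAB = ["<sos>", "<eos>", "je", "suis", "etudiant"]  # hypothetical target vocabulary
HIDDEN = 3


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


# Random placeholder parameters; a trained decoder would learn these.
EMB = {w: [random.uniform(-1, 1) for _ in range(HIDDEN)] for w in VOCAB}
W_x, W_h = rand_matrix(HIDDEN, HIDDEN), rand_matrix(HIDDEN, HIDDEN)
W_out = rand_matrix(len(VOCAB), HIDDEN)  # hidden state -> one score per word


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def decoder_step(word, h_prev):
    """One decoder time step: previous word + previous hidden state -> scores, new state."""
    h = [math.tanh(p + q) for p, q in zip(matvec(W_x, EMB[word]), matvec(W_h, h_prev))]
    return matvec(W_out, h), h


def greedy_decode(context_vector, max_len=10):
    h, word, output = context_vector, "<sos>", []  # context vector = initial hidden state
    for _ in range(max_len):
        scores, h = decoder_step(word, h)
        word = VOCAB[max(range(len(VOCAB)), key=lambda k: scores[k])]  # most likely word
        if word == "<eos>":
            break
        output.append(word)  # the prediction becomes the next step's input
    return output


print(greedy_decode([0.1, -0.2, 0.3]))
```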

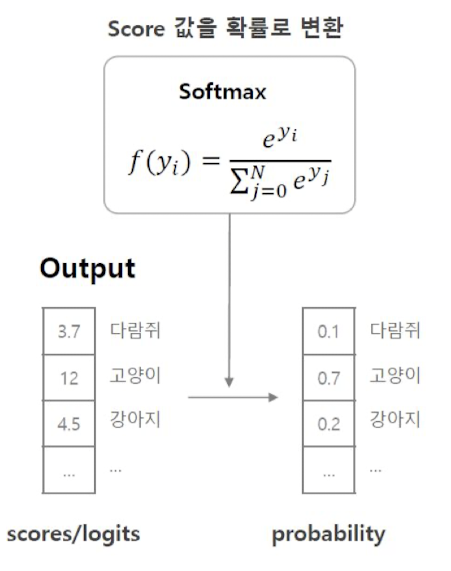

- It makes this choice using a function called softmax.

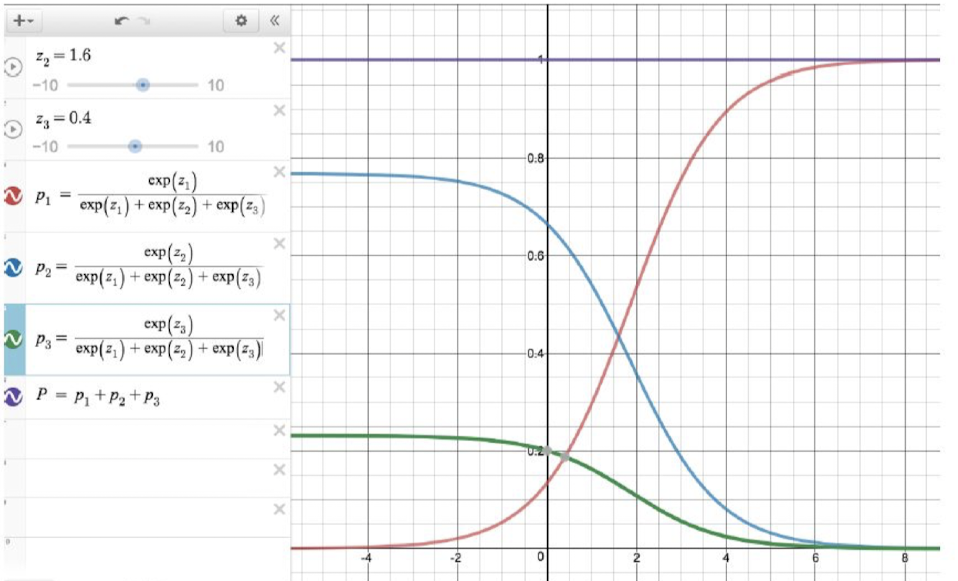

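Softmax function

- The softmax function is an activation function used in multi-class classification problems.

- It converts a vector of values into a probability distribution.

- Each element of the output lies between 0 and 1, and the elements of the output vector sum to 1.

- The largest value in the input vector also gets the largest probability in the output vector.

Softmax thus converts scores into probabilities.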
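Here is a minimal softmax in plain Python. Subtracting the maximum score before exponentiating is a common numerical-stability trick. It does not change the result, because adding the same constant to every input leaves softmax unchanged.

```python
import math


def softmax(scores):
    """Convert real-valued scores into probabilities that sum to 1."""
    m = max(scores)                             # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

Without the shift, an input like `[1002.0, 1001.0, 1000.1]` would overflow `math.exp`. With it, that input gives the same probabilities as `[2.0, 1.0, 0.1]`.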

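Back to the decoder.

- At each time step, the decoder uses the output vector of its RNN cell (the left part of the figure).

- Softmax turns this output vector into a probability for each word that could come next in the output sequence. The decoder uses these probabilities to decide which word to output.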
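Here is a sketch of that last step for a single time step:

1. A linear output layer turns the decoder's output vector into one score per vocabulary word.
2. Softmax turns the scores into probabilities.
3. The most probable word is chosen.

The vocabulary, weights, and output vector are hypothetical numbers picked for illustration. In a trained model the output layer's weights are learned.

```python
import math

VOCAB = ["<eos>", "je", "suis", "etudiant"]  # hypothetical target vocabulary
# Hypothetical output-layer weights: one row per vocabulary word (hidden size 2).
W_out = [[0.1, -0.3], [1.2, 0.4], [-0.5, 0.9], [0.3, 0.2]]
b_out = [0.0, 0.0, 0.0, 0.0]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_distribution(output_vector):
    """Linear layer (one score per word), then softmax over the vocabulary."""
    scores = [sum(w * h for w, h in zip(row, output_vector)) + b
              for row, b in zip(W_out, b_out)]
    return dict(zip(VOCAB, softmax(scores)))


dist = word_distribution([0.8, 0.1])  # hypothetical decoder output vector at one time step
next_word = max(dist, key=dist.get)
print(next_word)  # je
```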
