In this post, I review the paper "Parameter-Efficient Transfer Learning for NLP".

- Paper link: Parameter-Efficient Transfer Learning for NLP (arxiv.org)

Abstract

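Fine-tuning a large pre-trained model is an effective transfer mechanism in NLP, but it is parameter-inefficient when there are many downstream tasks, because an entire new model has to be trained for every task. To address this, the authors propose Adapter Modules.

Adapter Modules have the following advantages:

- Compact and extensible: only a small number of parameters are added per task.

- Efficient transfer: adding a new task does not require revisiting previous ones.

- Parameter sharing: the parameters of the original network stay fixed, which yields a high degree of parameter sharing.

The authors apply Adapter Modules to the BERT Transformer model and evaluate them on 26 diverse text classification tasks, including the GLUE Benchmark. Adapters attain near state-of-the-art performance while adding very few parameters per task. On GLUE in particular, they stay within 0.4% of full Fine-tuning performance while adding only 3.6% parameters per task, whereas conventional Fine-tuning trains 100% of the parameters per task.

Conclusion: the paper proposes a Transfer Learning technique based on Adapter Modules.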

- For the concepts behind Transfer Learning, see my earlier post "[DL] Transfer Learning - 전이 학습" (daehyun-bigbread.tistory.com).

Introduction

This paper addresses the Online Setting, in which tasks arrive in a stream.

"In this paper we address the online setting, where tasks arrive in sequence"

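The Online Setting here refers to an environment such as a cloud service, where many tasks have to be learned in order to solve the tasks that customers submit one after another.

- The goal is to build a system that performs well on all tasks without training an entire new model for each one.

To this end, the authors propose a Transfer Learning strategy that yields Compact and Extensible Downstream Models. Two properties are defined here.

- Compact models solve many tasks using a small number of additional parameters per task.

- Extensible models can be trained incrementally on new tasks without forgetting previous ones.

The two most commonly used Transfer Learning techniques are Feature-Based Transfer and Fine-Tuning.

- Feature-Based Transfer involves pre-training real-valued embedding vectors. The embeddings may be at the word, sentence, or paragraph level, and they are then fed into a custom Downstream Model.

- Fine-Tuning copies the weights of a pre-trained network and tunes them on the downstream task. Recent work shows that Fine-Tuning often performs better than Feature-Based Transfer.

- Both Feature-Based Transfer and Fine-Tuning require a new set of weights for each task, so neither adapts efficiently as new tasks keep arriving.

Instead of these two techniques, the authors therefore propose an alternative Transfer Method based on Adapter Modules.

- Fine-Tuning becomes more parameter-efficient when the lower layers of the network are shared between tasks, but the proposed Adapter Tuning method is even more Parameter Efficient. Adapter-Based Tuning needs far fewer parameters per task than Fine-Tuning while reaching similar performance.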

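Adapters are new modules added between the layers of a pre-trained network.

Adapter-Based Tuning differs from Feature-Based Transfer and Fine-Tuning as follows. Consider a function ϕw(x) with parameters w.

- Feature-Based Transfer composes ϕw with a new function χv to yield χv(ϕw(x)), and trains only the task-specific parameters v.

- Fine-Tuning adjusts the original parameters w for each task, which limits Compactness.

Adapter-Based Tuning works as follows.

- The pre-trained parameters w are frozen, and new task-specific parameters v are learned.

- A new function ψw,v(x) is defined, with the initial parameters v0 set so that ψw,v0(x) ≈ ϕw(x).

- During training only the task-specific parameters v are tuned. Since |v| ≪ |w|, the resulting model is efficient and Compact.

- New tasks can be added without affecting previous ones.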

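Compared with existing learning paradigms:

Multi-Task Learning also yields Compact models, but it requires simultaneous access to all tasks, which Adapter-Based Tuning does not.

Continual Learning systems can learn from a stream of tasks, but they suffer from the Forgetting Problem, in which previous tasks are forgotten.

Adapter-Based Tuning:

- Tasks are trained independently while the parameters are still shared across tasks.

- Previous tasks are remembered perfectly using a small number of task-specific parameters.

The key to Parameter-Efficient Tuning for NLP with Adapters is the design of an effective Adapter Module and its integration with the base model, and the authors propose a simple yet effective Bottleneck Architecture.

On the GLUE Benchmark, this strategy almost matches the performance of fully Fine-Tuned BERT while using only 3% task-specific parameters, whereas Fine-Tuning uses 100% task-specific parameters. Similar results are observed on a further 17 public text datasets and on SQuAD extractive question answering.

In summary, Adapter-Based Tuning yields a single, extensible model that attains near state-of-the-art performance in text classification.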

Adapter tuning for NLP

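This paper presents Adapter Tuning, a strategy for tuning a large text model on several Downstream Tasks.

It has three key properties:

- it attains good performance

- it permits training on tasks sequentially, that is, it does not require simultaneous access to all datasets

- it adds only a small number of additional parameters per task

These properties are especially useful in cloud-service settings, where many models have to be trained on a series of downstream tasks, so a high degree of sharing is desirable.

To achieve these goals, the authors propose a new bottleneck adapter module.

Adapter Tuning adds a small number of new parameters to the model and trains them on the downstream task.

Conventional Fine-Tuning modifies the top layer of the network, which is required because the label spaces and losses of the upstream and downstream tasks differ. Adapter Tuning goes further and injects new layers into the original network. The weights of the original network are left untouched, and only the new adapter layers, which are initialized at random, are trained.

Adapter modules have two main features:

- A small number of parameters: adapter modules need to be small compared to the layers of the original network, so that the total model size grows relatively slowly as tasks are added.

- Near-identity initialization: adapter modules must be initialized to a near-identity function for training to be stable.

With this initialization the original network is unaffected when training starts, and during training the adapters can be activated to change the distribution of activations throughout the network. Adapter modules can also be ignored when they are not needed.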

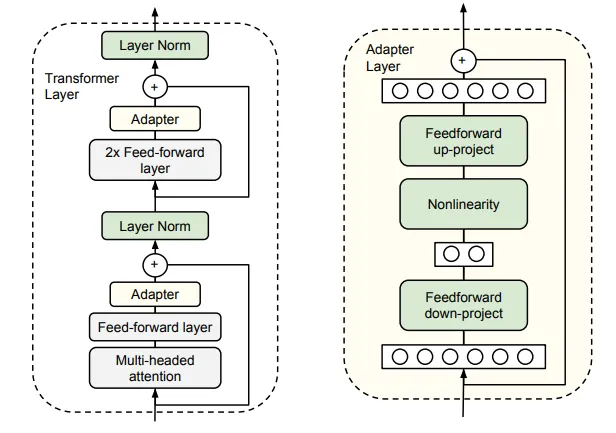

Instantiation for Transformer Networks

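Adapter-based tuning is instantiated for Transformers, which attain state-of-the-art performance in many NLP tasks. There are many possible design choices for adapter modules, but the paper finds that a simple design already performs well.

Each Transformer layer contains two primary sub-layers: an Attention Layer and a Feedforward Layer. The output of each sub-layer is projected back to the input size, and a Skip Connection is then applied. The output of each sub-layer is also fed into Layer Normalization. The authors insert two serial adapters per Transformer layer, one after each of these sub-layers. The adapter is applied directly to the sub-layer output, after the projection back to the input size but before the Skip Connection is added. The adapter output is then passed directly into the following Layer Normalization.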
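The wiring described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: `sublayer_with_adapter`, `transformer_layer`, and the simplified `layer_norm` (no learned scale or bias) are hypothetical names, and the attention, feed-forward, and adapter functions are passed in as plain callables.

```python
from typing import Callable, List

Vector = List[float]
Fn = Callable[[Vector], Vector]


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Simplified layer normalization (assumption: no learned scale/bias)."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / (var + eps) ** 0.5 for xi in x]


def sublayer_with_adapter(x: Vector, sublayer: Fn, adapter: Fn) -> Vector:
    h = sublayer(x)                        # attention or feed-forward, projected back to input size
    h = adapter(h)                         # adapter acts directly on the sub-layer output
    h = [xi + hi for xi, hi in zip(x, h)]  # Transformer skip connection is added afterwards
    return layer_norm(h)                   # adapter output flows into layer normalization


def transformer_layer(x: Vector, attention: Fn, feed_forward: Fn,
                      adapter_attn: Fn, adapter_ffn: Fn) -> Vector:
    # Two adapters per layer: one after attention, one after feed-forward.
    x = sublayer_with_adapter(x, attention, adapter_attn)
    return sublayer_with_adapter(x, feed_forward, adapter_ffn)
```

Passing an identity function as the adapter recovers the ordinary Transformer layer, which is the behaviour the near-identity initialization aims for at the start of training.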

In addition, to limit the number of parameters in the Adapter Module, the authors propose the bottleneck Architecture mentioned earlier.

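𝑤: learned parameters (vector) of the Pretrained model

𝑣: parameters (vector) that have to be newly learned

𝜑_𝑤: the pre-trained model (Neural network)

x: input data

Feature-based learning: 𝜒_𝑣(𝜑_𝑤(x))

: 𝜒_𝑣 can be thought of as a final layer that only changes the output. In other words, it transforms the result of the pre-trained network 𝜑_𝑤 into the output required by the new task.

Fine-tuning: 𝜑'_𝑤'(x)

The pre-trained parameters themselves are changed, that is, the model function itself is changed: 𝜑_𝑤(x) -> 𝜑'_𝑤'(x)

Adapter: 𝜓_{𝑤,𝑣}

𝑤 is kept fixed and only the weights 𝑣 for the new task are updated.

The adapter projects the d-dimensional features down to a smaller dimension m, applies a nonlinearity, and then projects back up to d dimensions.

The total number of parameters added per layer, including biases, is 2md + d + m. Setting m ≪ d limits the number of parameters added per task; in practice the authors use around 0.5-8% of the parameters of the original model.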
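To make the count concrete, the sketch below tallies the weights and biases of one bottleneck adapter and checks the result against 2md + d + m. The function name `adapter_param_count` is hypothetical, and the example values are assumptions: the BERTBASE dimensions (hidden size 768, 12 layers), adapter size 64, and two adapters per Transformer layer. The per-task Layer Normalization parameters are ignored.

```python
def adapter_param_count(d: int, m: int) -> int:
    """Parameters of one bottleneck adapter, biases included."""
    down_projection = d * m + m   # d -> m weights plus m biases
    up_projection = m * d + d     # m -> d weights plus d biases
    return down_projection + up_projection


# Assumed example values: BERT-base hidden size and depth, adapter size 64.
d, m, num_layers, adapters_per_layer = 768, 64, 12, 2

per_adapter = adapter_param_count(d, m)
assert per_adapter == 2 * m * d + d + m

total = per_adapter * num_layers * adapters_per_layer
print(per_adapter, total)  # 99136 2379264
```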

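The bottleneck dimension m provides a simple way to trade off performance against parameter efficiency. The adapter module itself has an internal Skip Connection, so if the parameters of the projection layers are initialized close to zero, the module is initialized to an approximate identity function.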
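The near-identity behaviour can be checked with a small pure-Python sketch. This is an illustration under stated assumptions, not the paper's code: `make_adapter` and `adapter_forward` are hypothetical names, ReLU stands in for the unspecified nonlinearity, and the weights are drawn from a zero-mean Gaussian with a small standard deviation.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
AdapterParams = Tuple[Matrix, Vector, Matrix, Vector]


def make_adapter(d: int, m: int, std: float = 1e-2, seed: int = 0) -> AdapterParams:
    """Near-zero initialization of the down (d x m) and up (m x d) projections."""
    rng = random.Random(seed)
    w_down = [[rng.gauss(0.0, std) for _ in range(m)] for _ in range(d)]
    w_up = [[rng.gauss(0.0, std) for _ in range(d)] for _ in range(m)]
    return w_down, [0.0] * m, w_up, [0.0] * d


def adapter_forward(h: Vector, params: AdapterParams) -> Vector:
    w_down, b_down, w_up, b_up = params
    d, m = len(w_down), len(b_down)
    # Project d -> m, then apply the nonlinearity (ReLU is an assumption).
    z = [max(0.0, sum(h[i] * w_down[i][j] for i in range(d)) + b_down[j])
         for j in range(m)]
    # Project m -> d.
    out = [sum(z[j] * w_up[j][i] for j in range(m)) + b_up[i] for i in range(d)]
    # Internal skip connection: output = input + bottleneck(input).
    return [hi + oi for hi, oi in zip(h, out)]


h = [0.5, -1.0, 2.0, 0.25, -0.75, 1.5, 0.0, 1.0]
params = make_adapter(d=len(h), m=2, std=1e-3)
y = adapter_forward(h, params)
max_change = max(abs(a - b) for a, b in zip(h, y))
print(max_change)  # tiny compared with the entries of h
```

Because the bottleneck output is a product of two near-zero projections, it is much smaller than the input, so the skip connection dominates and the module starts out close to the identity.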

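New Layer Normalization parameters are also trained for each task. This technique is similar to Conditional Batch Normalization, FiLM, and Self-Modulation, and it adapts the network parameter-efficiently with only 2d parameters per layer. However, the authors note that training the Layer Normalization parameters alone is not enough to reach good performance.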

Experiments

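The experiments show that Adapter-based tuning achieves parameter-efficient transfer for text tasks. On the GLUE benchmark, adapter tuning is within 0.4% of full fine-tuning of BERT, yet it adds only about 3% of the number of parameters trained by fine-tuning. This result is confirmed on a further 17 public classification tasks and on SQuAD question answering. The analysis shows that adapter-based tuning automatically focuses on the higher layers of the network.

Experimental Setting

- Base model: the pre-trained BERT Transformer network.

- Classification: follows Devlin et al. (2018). Class predictions are made with the special "[CLS]" token and a linear layer.

- Training: Adam optimizer with a warm-up learning rate schedule and a batch size of 32, run on 4 Google Cloud TPUs.

- Hyperparameter tuning: for each dataset, the best model is selected on the validation set.

Main goal: match fine-tuning performance with as few total parameters as possible, ideally close to 1× the parameters of the base model.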

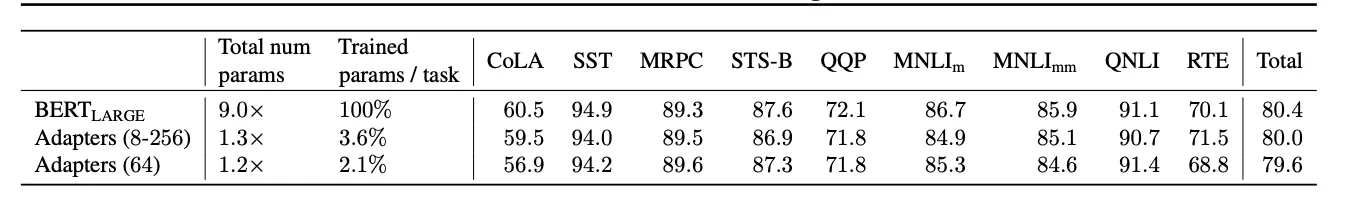

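GLUE Benchmark

- Model: BERTLARGE (24 layers, 330M parameters).

- Adapter tuning: adapter layers are added and only a small subset of parameters is trained:

- Hyperparameter sweep:

- learning rate: {3 × 10⁻⁵, 3 × 10⁻⁴, 3 × 10⁻³}

- number of epochs: {3, 20}

- adapter size: {8, 64, 256}

- Each run is repeated 5 times with different random seeds for stability.

- Performance:

- Adapter tuning: mean GLUE score of 80.0.

- Full fine-tuning: mean score of 80.4 (0.4 points higher).

- With the adapter size fixed to 64, the mean score drops slightly to 79.6.

- Parameter efficiency:

- Full fine-tuning: requires 9× the BERT parameters.

- Adapter tuning: requires only 1.3× the parameters.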
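The 9× and 1.3× figures follow from simple bookkeeping, sketched below. The helper names are hypothetical; the calculation assumes nine task-specific models (as implied by the 9× figure) and the 3.6% per-task adapter overhead quoted in the abstract.

```python
def fine_tuning_total(num_tasks: int) -> float:
    """Every task stores its own full copy of the model."""
    return float(num_tasks)


def adapter_total(num_tasks: int, per_task_fraction: float) -> float:
    """One shared frozen model plus a small set of parameters per task."""
    return 1.0 + num_tasks * per_task_fraction


num_tasks = 9              # assumption: nine GLUE task models
per_task_fraction = 0.036  # 3.6% added parameters per task

print(fine_tuning_total(num_tasks))                           # 9.0
print(round(adapter_total(num_tasks, per_task_fraction), 2))  # 1.32
```

The shared base model is counted once for adapters, which is why the total grows slowly as tasks are added.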

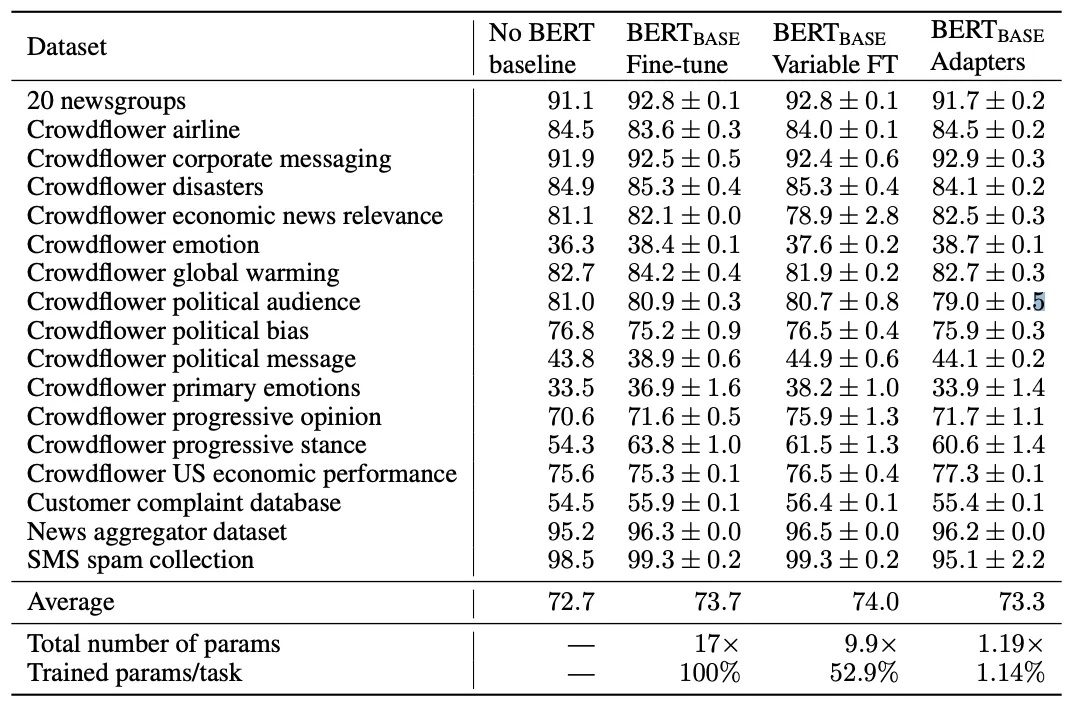

Additional Classification Tasks

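- Datasets: 900 to 330k training examples, 2 to 157 classes, and average text lengths of 57 to 1,900 characters.

- Methods compared:

- Full fine-tuning.

- Variable fine-tuning (only the top n layers are tuned).

- Adapter tuning.

- Results:

- Adapter tuning performs almost as well as full fine-tuning (a 0.4% gap) while being far more parameter-efficient.

- Parameter comparison:

- Full fine-tuning: 17× the BERTBASE parameters.

- Variable fine-tuning: 9.9× on average.

- Adapter tuning: only 1.19× the parameters across all tasks.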

Parameter/Performance Trade-off

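The adapter size controls parameter efficiency: smaller adapters introduce fewer parameters, possibly at a cost to performance. To explore this trade-off, the authors experiment with different adapter sizes and compare against two baselines.

- (i) Fine-tuning only the top k layers of BERTBASE.

- (ii) Tuning only the layer normalization parameters.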

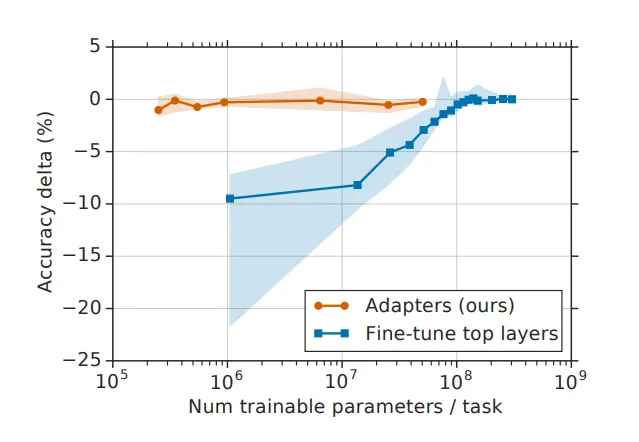

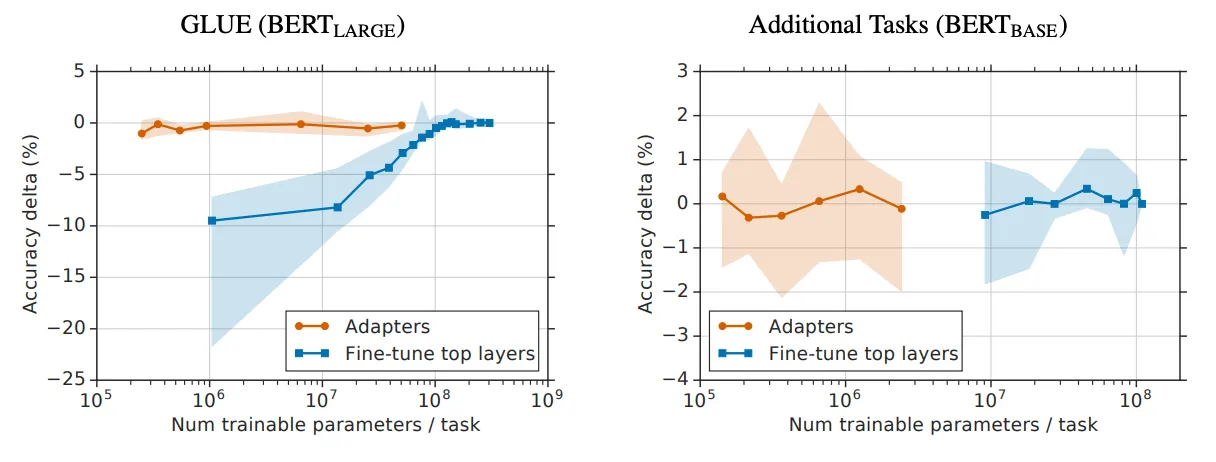

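Figure 3 shows the Trade-off between parameter efficiency and performance, aggregated over all tasks in GLUE and in the additional classification suite. On GLUE, performance drops sharply when fewer layers are fine-tuned, whereas some of the additional tasks benefit from training fewer layers, so the drop is much smaller. In both cases, adapters maintain strong performance with far fewer parameters than fine-tuning.

Figure 4 shows more detailed results for two GLUE tasks, MNLIm and CoLA. Tuning the top layers trains more task-specific parameters for all k > 2. When fine-tuning with a comparable number of task-specific parameters, performance is substantially worse than with adapters.

For example, fine-tuning only the top layer yields about 9M trainable parameters and a validation accuracy of 77.8% ± 0.1% on MNLIm. In contrast, adapter tuning with size 64 yields about 2M trainable parameters and a validation accuracy of 83.7% ± 0.1%. Full fine-tuning reaches 84.4% ± 0.02% on MNLIm. A similar trend is observed on CoLA.

As a further comparison, only the layer normalization parameters are tuned. These layers contain only point-wise additions and multiplications, so they introduce just 40k trainable parameters, but performance suffers: it drops by about 3.5% on CoLA and about 4% on MNLIm.

In conclusion, adapter tuning is highly parameter-efficient: with adapters of 0.5-5% of the original model size there is almost no loss in performance, and the results are within 1% of the competitive published results on BERTLARGE.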

SQuAD Extractive Question Answering

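Finally, to confirm that Adapters also work on tasks other than classification, the authors run experiments on the SQuAD v1.1 dataset. Given a question and a Wikipedia paragraph, the task is to select the answer to the question from the paragraph.

Figure 5 shows the parameter/performance trade-off of Fine-tuning and Adapters on the SQuAD validation set. For Fine-tuning, the sweep covers the number of trained layers, the learning rate {3·10⁻⁵, 5·10⁻⁵, 1·10⁻⁴}, and the number of epochs {2, 3, 5}. For Adapters, the sweep covers the Adapter size, the learning rate {3·10⁻⁵, 1·10⁻⁴, 3·10⁻⁴, 1·10⁻³}, and the number of epochs {3, 10, 20}.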
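The two sweeps can be enumerated with `itertools.product`. The sketch below only covers the learning rates and epoch counts listed above; the swept Adapter sizes and numbers of trained layers are not given in this section, so they are left out rather than guessed. Variable names are illustrative.

```python
from itertools import product

fine_tuning_grid = list(product(
    [3e-5, 5e-5, 1e-4],   # learning rates
    [2, 3, 5],            # epochs
))

adapter_grid = list(product(
    [3e-5, 1e-4, 3e-4, 1e-3],  # learning rates
    [3, 10, 20],               # epochs
))

# Configurations per number of trained layers / per Adapter size.
print(len(fine_tuning_grid), len(adapter_grid))  # 9 12
```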

As with classification, Adapters reach performance comparable to Fine-tuning while training far fewer parameters. Adapters of size 64 (2% of the parameters) attain a best F1 of 90.4%, while Fine-tuning attains 90.7%. Even very small Adapters (size 2, 0.1% of the parameters) reach an F1 of 89.9%.

Analysis and Discussion

1. Importance and role of Adapters

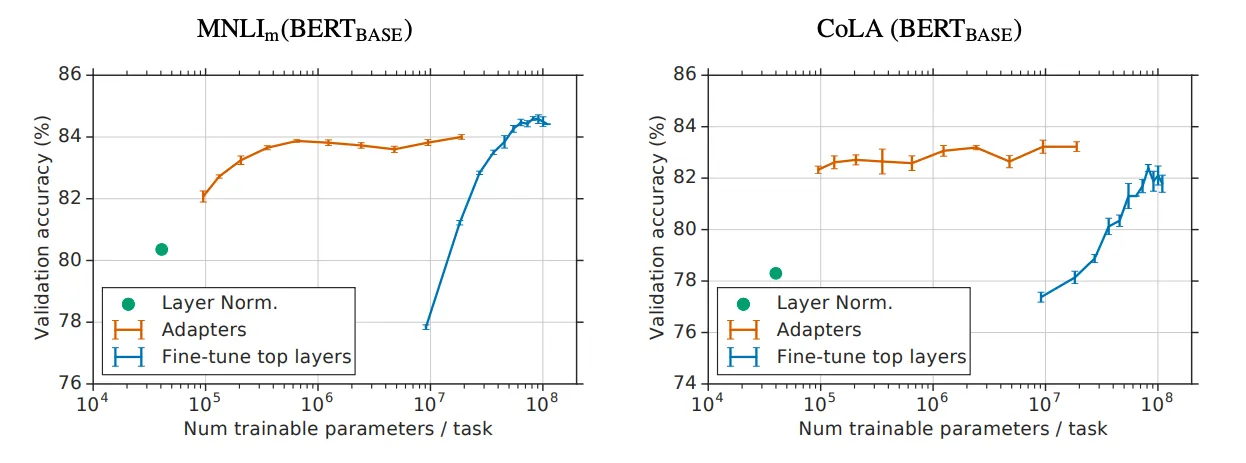

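- Ablating individual Adapters

- Some trained Adapters are removed and the model is re-evaluated without re-training. Removing the Adapters of any single layer has only a small impact on performance, with a maximum drop of 2%.

- Removing all Adapters: performance drops to 37% on MNLI and 69% on CoLA, the scores obtained by predicting the majority class. Each Adapter has a small individual influence, but together they have a large effect on the network.

- Importance of the higher layers

- Removing the Adapters from the lower layers (layers 0-4) barely affects performance.

- Adapters in the higher layers have a larger impact. This fits the intuition that the higher layers build task-specific features, and the fact that Adapters act preferentially on the higher layers mirrors common Fine-tuning strategies.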
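The ablation procedure can be pictured as replacing the Adapters of a span of layers with the identity function and re-evaluating without re-training. The sketch below is a toy illustration: `ablate_span` and `apply_all` are hypothetical helpers, each Adapter is a plain callable, and the frozen Transformer sub-layers between them are omitted.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Adapter = Callable[[Vector], Vector]


def identity(h: Vector) -> Vector:
    return h


def ablate_span(adapters: Sequence[Adapter], first: int, last: int) -> List[Adapter]:
    """Copy of `adapters` with layers first..last (inclusive) replaced by identity."""
    return [identity if first <= i <= last else adapter
            for i, adapter in enumerate(adapters)]


def apply_all(h: Vector, adapters: Sequence[Adapter]) -> Vector:
    for adapter in adapters:
        h = adapter(h)
    return h


# Toy "trained" adapters: layer k shifts every feature by k + 1.
trained = [lambda h, k=k: [x + k + 1 for x in h] for k in range(3)]

print(apply_all([0.0], trained))                     # [6.0]
print(apply_all([0.0], ablate_span(trained, 0, 1)))  # [3.0]
print(apply_all([0.0], ablate_span(trained, 0, 2)))  # [0.0]
```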

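2. Robustness to Adapter initialization and size

- Initialization scale

- Performance is stable when the Adapter weights are initialized with a standard deviation of 10⁻² or below.

- When the initialization is too large, performance degrades, more noticeably on CoLA.

- Standard deviations in the range [10⁻⁷, 1] were tested; 10⁻² or below is recommended.

- Performance by Adapter size

- Across Adapter sizes 8, 64, and 256, performance barely changes.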

- Mean validation accuracy across the eight classification tasks:

- Size 8: 86.2%

- Size 64: 85.8%

- Size 256: 85.7%

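3. Attempts to extend the Adapter architecture

- Extension experiments: several architectural variants were tried, but none gave a meaningful improvement.

- Extensions tried:

- Adding Batch/Layer Normalization to the Adapter.

- Increasing the number of layers per Adapter.

- Different activation functions, such as tanh.

- Inserting Adapters only inside the Attention layer.

- Adding Adapters in parallel to the main layers, possibly with a multiplicative interaction.

- Result: performance similar to the proposed basic Adapter structure.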


Related Work

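Pre-trained text representations

- Pre-trained text representations are widely used to improve performance on NLP tasks. They are trained mainly on large unlabeled corpora and then fine-tuned on downstream tasks.

- Evolution of word embeddings: from early techniques such as Brown clusters to deep-learning-based approaches such as Word2Vec, GloVe, and FastText (Mikolov et al., 2013; Pennington et al., 2014). Embeddings of longer texts were developed in work such as Le & Mikolov (2014).

- Adding context: ELMo and BiLSTM language models encode contextual information. Like these models, adapters make use of the inner layers, but they are distinctive in that they reconfigure the features throughout the entire network.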

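Fine-tuning

- The entire pre-trained model is fine-tuned for the task, so a new set of network weights is needed for every new task.

- Advantage: no task-specific model design is required, and Transformer networks (Vaswani et al., 2017) trained with a Masked Language Model (MLM) objective achieve state-of-the-art performance on tasks such as question answering and text classification (Devlin et al., 2018).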

Multi-task Learning (MTL)

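- The lower layers are shared, and the upper layers are task-specific.

- Several tasks are learned simultaneously, and regularities across tasks are exploited to improve performance (Caruana, 1997).

- Applications: part-of-speech tagging, named entity recognition, machine translation (Johnson et al., 2017), question answering (Choi et al., 2017), and others.

- Limitation: simultaneous access to the tasks is required during training, which is where Adapters differ.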

Continual Learning

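- Continual learning learns from a sequence of tasks and aims to overcome "catastrophic forgetting", where previous tasks are forgotten when a new task is learned.

- Approach: Progressive Networks avoid forgetting by instantiating a new network for each task (Rusu et al., 2016). However, this becomes inefficient as the number of tasks grows, and Adapters scale more efficiently.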

Transfer Learning in Vision

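- ImageNet pre-trained models: Fine-tuning achieves state-of-the-art performance on vision tasks such as classification, detection, and segmentation (Kornblith et al., 2018).

- Convolutional Adapters: small convolutional layers are added for task-specific learning (Rebuffi et al., 2017), which increases model size by about 11% per task. The bottleneck Adapters in this paper can be much smaller while still performing well.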

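Comparison with other Adapter work on BERT

- Stickland & Murray (2019): introduce Projected Attention Layers (PALs) and perform multi-task training of BERT on all GLUE tasks. PALs and Adapters play similar roles, but the architecture and the training approach differ.

- Conclusion: strong performance and memory efficiency are shown for multi-task learning and continual learning as well.

- Adapters scale efficiently thanks to their small size and address the limitations of pre-trained models combined with fine-tuning.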