I got so busy that I forgot to write the follow-up to this post... I'll do my best to finish it!

```python
# The current directory is /content; the practice code and data are downloaded relative to it.
!pwd
!rm -rf DLCV
!git clone https://github.com/chulminkw/DLCV.git

# Confirm that the DLCV directory was downloaded and contains the Detection and Segmentation directories
!ls -lia
!ls -lia DLCV
```

Image & Video Object Detection with OpenCV Darknet YOLO

- Here we will run Object Detection with both YOLO and tiny-yolo.

import cv2

import matplotlib.pyplot as plt

import os

%matplotlib inline

# 코랩 버전은 default_dir 은 /content/DLCV로 지정하고 os.path.join()으로 상세 파일/디렉토리를 지정합니다.

default_dir = '/content/DLCV'

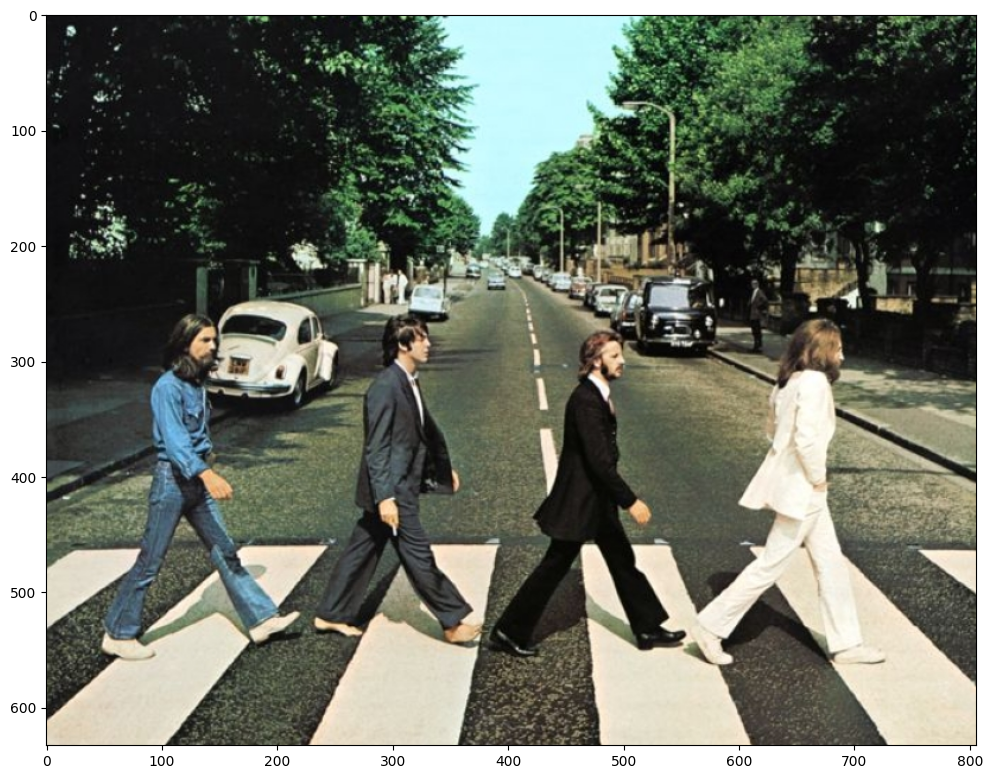

img = cv2.imread(os.path.join(default_dir, 'data/image/beatles01.jpg'))

img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

print('image shape:', img.shape)

plt.figure(figsize=(12, 12))

plt.imshow(img_rgb)image shape: (633, 806, 3)


After checking the data, we will download two files from the Darknet YOLO site: the inference model (weights) trained on COCO and its config file. We then use them to create an inference model in OpenCV.

- The download URLs are listed at https://pjreddie.com/darknet/yolo/

- Download the pretrained model with wget https://pjreddie.com/media/files/yolov3.weights

- The config file for the pretrained model can be downloaded from https://raw.githubusercontent.com/pjreddie/darknet/master/cfg/yolov3.cfg or with wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3.cfg?raw=true -O ./yolov3.cfg

- In readNetFromDarknet(config file, weight file), the config file argument comes before the weight file argument. Watch the order.

- The pretrained tiny yolo weight file can be downloaded with wget https://pjreddie.com/media/files/yolov3-tiny.weights

- The tiny yolo config file can be downloaded with wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3-tiny.cfg?raw=true -O ./yolov3-tiny.cfg
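readNetFromDarknet expects the config path first and the weights path second. One way to avoid swapping them is to build both paths in one place and check them before loading. The helpers below are hypothetical (darknet_paths and check_darknet_paths are not from the original post). They only build and check file paths and do not call OpenCV.

```python
import os


def darknet_paths(model_dir, model_name):
    """Hypothetical helper: return (config_path, weights_path) in the order
    that cv2.dnn.readNetFromDarknet(config, weights) expects."""
    config_path = os.path.join(model_dir, model_name + '.cfg')
    weights_path = os.path.join(model_dir, model_name + '.weights')
    return config_path, weights_path


def check_darknet_paths(config_path, weights_path):
    """Hypothetical helper: fail early if the arguments look swapped or a file is missing."""
    if not config_path.endswith('.cfg') or not weights_path.endswith('.weights'):
        raise ValueError('expected (config .cfg, weights .weights) in that order')
    for path in (config_path, weights_path):
        if not os.path.isfile(path):
            raise FileNotFoundError(path)


demo_cfg, demo_weights = darknet_paths('/content/DLCV/Detection/yolo/pretrained', 'yolov3')
print(demo_cfg)
print(demo_weights)
# After the download cell has run, the files can be checked and the network loaded:
# check_darknet_paths(demo_cfg, demo_weights)
# cv_net_yolo = cv2.dnn.readNetFromDarknet(demo_cfg, demo_weights)
```

The extension check is only a heuristic. It catches swapped arguments, but it cannot tell whether a downloaded file is actually valid.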

```python
!rm -rf /content/DLCV/Detection/yolo/pretrained
!mkdir /content/DLCV/Detection/yolo/pretrained

# Check that the pretrained directory was created.
%cd /content/DLCV/Detection/yolo
!ls

### Download the yolo weight files and config files pretrained on the COCO dataset
%cd /content/DLCV/Detection/yolo/pretrained
!echo "##### downloading pretrained yolo/tiny-yolo weight file and config file"
!wget https://pjreddie.com/media/files/yolov3.weights
!wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3.cfg?raw=true -O ./yolov3.cfg
!wget https://pjreddie.com/media/files/yolov3-tiny.weights
!wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3-tiny.cfg?raw=true -O ./yolov3-tiny.cfg

!echo "##### check out pretrained yolo files"
!ls /content/DLCV/Detection/yolo/pretrained
```

Loading the yolo inference network model with readNetFromDarknet(config file, weight file)

```python
import os

import cv2

# CUR_DIR = os.path.abspath('.')
# In the Colab version, use the line below.
CUR_DIR = '/content/DLCV/Detection/yolo'

weights_path = os.path.join(CUR_DIR, 'pretrained/yolov3.weights')
config_path = os.path.join(CUR_DIR, 'pretrained/yolov3.cfg')

# Note: the config file argument comes first.
cv_net_yolo = cv2.dnn.readNetFromDarknet(config_path, weights_path)
```

Mapping COCO class ids to class names

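In labels_to_names_seq, the class ids run sequentially from 0 to 79. This matches the 80 class scores that the Darknet YOLO model outputs.

labels_to_names instead follows the 91 category ids of the original COCO paper (1 to 91). Some of those ids, such as 12 'street sign' and 26 'hat', are not among the 80 detection classes. For that reason, the detection code below uses labels_to_names_seq.

- The OpenCV DNN Darknet YOLO model is loaded in the cell above.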
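The sketch below shows why the 0-based class_id from np.argmax must be looked up in labels_to_names_seq and not in labels_to_names. It uses small hand-copied excerpts of both dictionaries, defined in the next cell; the *_excerpt and demo_* names are only for illustration. It then looks up the same id in each.

```python
# Excerpts of the two dictionaries defined in the next cell (first entries only).
labels_to_names_seq_excerpt = {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorbike'}
labels_to_names_excerpt = {1: 'person', 2: 'bicycle', 3: 'car', 4: 'motorcycle'}

# YOLO's class scores are indexed 0..79, so argmax returns a 0-based id.
demo_scores = [0.01, 0.02, 0.91, 0.03]  # made-up scores for illustration
demo_class_id = max(range(len(demo_scores)), key=lambda i: demo_scores[i])  # same as np.argmax

print('seq mapping          :', labels_to_names_seq_excerpt[demo_class_id])
print('91-id mapping        :', labels_to_names_excerpt[demo_class_id])
print('91-id mapping with +1:', labels_to_names_excerpt[demo_class_id + 1])
```

The +1 offset only happens to work for ids 0 to 10. From 'stop sign' onward, the 91-id table has gaps such as 12 'street sign', so a separate sequential table is the simpler choice.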

labels_to_names_seq = {0:'person',1:'bicycle',2:'car',3:'motorbike',4:'aeroplane',5:'bus',6:'train',7:'truck',8:'boat',9:'traffic light',10:'fire hydrant',

11:'stop sign',12:'parking meter',13:'bench',14:'bird',15:'cat',16:'dog',17:'horse',18:'sheep',19:'cow',20:'elephant',

21:'bear',22:'zebra',23:'giraffe',24:'backpack',25:'umbrella',26:'handbag',27:'tie',28:'suitcase',29:'frisbee',30:'skis',

31:'snowboard',32:'sports ball',33:'kite',34:'baseball bat',35:'baseball glove',36:'skateboard',37:'surfboard',38:'tennis racket',39:'bottle',40:'wine glass',

41:'cup',42:'fork',43:'knife',44:'spoon',45:'bowl',46:'banana',47:'apple',48:'sandwich',49:'orange',50:'broccoli',

51:'carrot',52:'hot dog',53:'pizza',54:'donut',55:'cake',56:'chair',57:'sofa',58:'pottedplant',59:'bed',60:'diningtable',

61:'toilet',62:'tvmonitor',63:'laptop',64:'mouse',65:'remote',66:'keyboard',67:'cell phone',68:'microwave',69:'oven',70:'toaster',

71:'sink',72:'refrigerator',73:'book',74:'clock',75:'vase',76:'scissors',77:'teddy bear',78:'hair drier',79:'toothbrush' }labels_to_names = {1:'person',2:'bicycle',3:'car',4:'motorcycle',5:'airplane',6:'bus',7:'train',8:'truck',9:'boat',10:'traffic light',

11:'fire hydrant',12:'street sign',13:'stop sign',14:'parking meter',15:'bench',16:'bird',17:'cat',18:'dog',19:'horse',20:'sheep',

21:'cow',22:'elephant',23:'bear',24:'zebra',25:'giraffe',26:'hat',27:'backpack',28:'umbrella',29:'shoe',30:'eye glasses',

31:'handbag',32:'tie',33:'suitcase',34:'frisbee',35:'skis',36:'snowboard',37:'sports ball',38:'kite',39:'baseball bat',40:'baseball glove',

41:'skateboard',42:'surfboard',43:'tennis racket',44:'bottle',45:'plate',46:'wine glass',47:'cup',48:'fork',49:'knife',50:'spoon',

51:'bowl',52:'banana',53:'apple',54:'sandwich',55:'orange',56:'broccoli',57:'carrot',58:'hot dog',59:'pizza',60:'donut',

61:'cake',62:'chair',63:'couch',64:'potted plant',65:'bed',66:'mirror',67:'dining table',68:'window',69:'desk',70:'toilet',

71:'door',72:'tv',73:'laptop',74:'mouse',75:'remote',76:'keyboard',77:'cell phone',78:'microwave',79:'oven',80:'toaster',

81:'sink',82:'refrigerator',83:'blender',84:'book',85:'clock',86:'vase',87:'scissors',88:'teddy bear',89:'hair drier',90:'toothbrush',

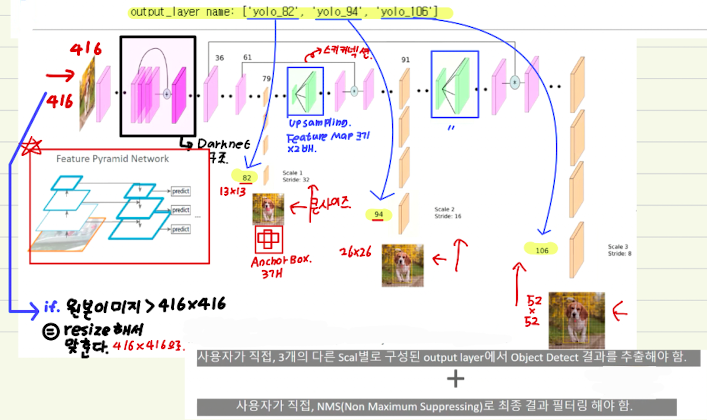

91:'hair brush'}3개의 Scale Output Layer에서 결과 데이터 추출

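```python
import numpy as np

# Get the names of all layers in the YOLO network
layer_names = cv_net_yolo.getLayerNames()

# Extract the YOLOv3 output layer names from the output layer indices.
# getUnconnectedOutLayers() returns 1-based indices (hence i - 1). Depending on the OpenCV version
# it returns an N x 1 or a 1-D array, so flatten() it first.
outlayer_names = [layer_names[i - 1] for i in np.array(cv_net_yolo.getUnconnectedOutLayers()).flatten()]

# Print the output layer names (the layers that return detections on the 13x13, 26x26 and 52x52 grids)
print('output_layer name:', outlayer_names)

# The loaded YOLOv3 model takes a 416x416 input.
# Resize the image to 416x416, convert BGR to RGB, and build the blob used as the network input
cv_net_yolo.setInput(cv2.dnn.blobFromImage(
    img,                  # original image
    scalefactor=1/255.0,  # normalize pixel values to 0~1 (divide by 255)
    size=(416, 416),      # YOLOv3 input size (416x416)
    swapRB=True,          # convert BGR channels to RGB
    crop=False            # resize the whole image without cropping
))

# Run object detection on the selected output layers and return their results
cv_outs = cv_net_yolo.forward(outlayer_names)

# Color of the bounding boxes drawn around detected objects (green)
green_color = (0, 255, 0)
# Text color of the caption (class name and probability) drawn above each box (red)
red_color = (0, 0, 255)

# Result: output_layer name: ['yolo_82', 'yolo_94', 'yolo_106']
```

Extracting the output layer names from the YOLO network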

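- cv_net_yolo.getLayerNames(): Returns the names of every layer in the network. YOLOv3 is a CNN (Convolutional Neural Network) with many layers. Most are convolutional layers, and there are also shortcut, route, upsample and yolo layers.

- cv_net_yolo.getUnconnectedOutLayers(): Returns the indices of the network's output layers, meaning layers whose outputs are not consumed by any other layer. The indices are 1-based, which is why the code uses layer_names[i - 1]. YOLOv3 has 3 output layers (13x13, 26x26 and 52x52 grids), and detection is performed on these layers.

- outlayer_names: The list of the output layer names extracted from the YOLO model. The detection results are read from these layers.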
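The three grid sizes follow from the 416x416 input and the downsampling factors (strides) of 32, 16 and 8 used by YOLOv3's three output scales. Each grid cell predicts 3 anchor boxes. The short sketch below just does that arithmetic. It reproduces the output shapes (507, 85), (2028, 85) and (8112, 85) printed later in this post.

```python
INPUT_SIZE = 416
STRIDES = (32, 16, 8)                 # downsampling factor of each YOLOv3 output scale
ANCHORS_PER_CELL = 3                  # each yolo layer predicts 3 boxes per grid cell
NUM_CLASSES = 80                      # COCO
VALUES_PER_BOX = 4 + 1 + NUM_CLASSES  # box (cx, cy, w, h) + objectness + class scores

output_shapes = []
for stride in STRIDES:
    grid = INPUT_SIZE // stride
    num_boxes = grid * grid * ANCHORS_PER_CELL
    output_shapes.append((num_boxes, VALUES_PER_BOX))
    print(f'{grid}x{grid} grid -> output shape {(num_boxes, VALUES_PER_BOX)}')

print('total candidate boxes:', sum(n for n, _ in output_shapes))
```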

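Setting the input image of the YOLOv3 model

cv2.dnn.blobFromImage: Converts an image into the format (a blob) that can be fed to the network. Several settings are applied in the process:

- scalefactor=1/255.0: Divides each pixel value by 255 to normalize it to the 0~1 range. This matches the input range the YOLO model was trained with.

- size=(416, 416): Resizes the input image to 416x416 pixels. YOLOv3 accepts input sizes that are multiples of 32, such as 320, 416 and 608. This post uses 416x416, which produces the 13x13, 26x26 and 52x52 output grids.

- swapRB=True: OpenCV stores images in BGR order by default, while YOLO expects RGB. The channels are therefore swapped from BGR to RGB.

- crop=False: The whole image is resized directly without cropping, so its aspect ratio is not preserved.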
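Here is a rough NumPy approximation of what blobFromImage produces with these four settings. It is only a sketch. It uses nearest-neighbour resizing for brevity, whereas OpenCV resizes with bilinear interpolation, so pixel values will differ slightly from the real blob. The function name blob_from_image_sketch is hypothetical.

```python
import numpy as np


def blob_from_image_sketch(img_bgr, scalefactor=1/255.0, size=(416, 416)):
    """Approximate cv2.dnn.blobFromImage(img, scalefactor, size, swapRB=True, crop=False).

    img_bgr: H x W x 3 uint8 array in BGR order. size is (width, height), as in OpenCV.
    Returns a 1 x 3 x height x width float32 array (NCHW).
    """
    out_w, out_h = size
    h, w = img_bgr.shape[:2]
    # crop=False: stretch the whole image to the target size (nearest neighbour here)
    row_idx = np.arange(out_h) * h // out_h
    col_idx = np.arange(out_w) * w // out_w
    resized = img_bgr[row_idx][:, col_idx]
    # swapRB=True: BGR -> RGB
    rgb = resized[..., ::-1]
    # scalefactor: 0~255 -> 0~1
    scaled = rgb.astype(np.float32) * np.float32(scalefactor)
    # HWC -> CHW, then add the batch dimension -> NCHW
    return scaled.transpose(2, 0, 1)[np.newaxis, ...]


test_img = np.zeros((633, 806, 3), dtype=np.uint8)
test_img[..., 0] = 255  # pure blue in BGR order
blob = blob_from_image_sketch(test_img)
print(blob.shape)  # (1, 3, 416, 416)
```

The blue channel ends up as the last channel of the blob (index 2), because the channel order is now RGB.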

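Performing Object Detection

- cv_net_yolo.forward(outlayer_names): Runs the network and returns the detection results of the requested output layers. outlayer_names is the list of output layers obtained above. For every candidate box, the results hold its position, size and class information.

- cv_outs: Holds the detection results produced by YOLOv3, one array per output layer.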


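Collecting the Object Detection information from all 3 scale output layers

YOLO returns center, width and height values. These are converted into top-left coordinates plus width and height. The bottom-right corner is then (left + width, top + height).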
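Here is the conversion on its own as a small sketch; the function name to_left_top_width_height is hypothetical. YOLO's detection[0:4] are the center x, center y, width and height, normalized to 0~1. They are first scaled by the original image's width (cols) and height (rows), exactly as in the loop in the next cell.

```python
def to_left_top_width_height(detection, cols, rows):
    """Convert normalized (center_x, center_y, width, height) to pixel [left, top, width, height]."""
    center_x = int(detection[0] * cols)
    center_y = int(detection[1] * rows)
    width = int(detection[2] * cols)
    height = int(detection[3] * rows)
    left = int(center_x - width / 2)
    top = int(center_y - height / 2)
    return [left, top, width, height]


# Made-up detection on an image the size of beatles01.jpg (806 wide, 633 high)
demo_box = to_left_top_width_height([0.5, 0.5, 0.2, 0.4], cols=806, rows=633)
print('top-left:', (demo_box[0], demo_box[1]),
      'bottom-right:', (demo_box[0] + demo_box[2], demo_box[1] + demo_box[3]))
```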

```python
import numpy as np

# The original image is resized to (416, 416) when it is fed to the YOLO network.
# The predicted box values are normalized (0~1), so the original image's shape is needed to scale them back.
rows = img.shape[0]  # height of the original image
cols = img.shape[1]  # width of the original image

# Confidence and NMS (Non-Maximum Suppression) thresholds
conf_threshold = 0.5  # confidence threshold (minimum confidence of a detected object)
nms_threshold = 0.4   # NMS threshold (IoU above which overlapping boxes are removed)

# Lists holding information about the detected objects
class_ids = []    # class IDs
confidences = []  # confidences
boxes = []        # bounding box coordinates

# Extract the detected object information from each of the 3 output layers returned by the YOLO network
for ix, output in enumerate(cv_outs):
    print('output shape:', output.shape)  # (number of candidate boxes, values per box) of this output layer

    # Extract information for each detected object in this output layer
    for jx, detection in enumerate(output):
        # From the 6th element on, detection holds the per-class scores (the first 5 are box information)
        scores = detection[5:]
        # Index (class ID) of the highest score
        class_id = np.argmax(scores)
        # Confidence of that class
        confidence = scores[class_id]

        # Keep only objects whose confidence is above conf_threshold (low-confidence detections are ignored)
        if confidence > conf_threshold:
            print('ix:', ix, 'jx:', jx, 'class_id', class_id, 'confidence:', confidence)

            # The first part of detection holds the center coordinates (x, y), width and height.
            # YOLO returns the center and size, not the top-left or bottom-right corners,
            # so scale them to the original image and compute the top-left corner.
            center_x = int(detection[0] * cols)  # center x of the object (scaled to the original image)
            center_y = int(detection[1] * rows)  # center y
            width = int(detection[2] * cols)     # width
            height = int(detection[3] * rows)    # height

            # Top-left corner: subtract half of the width and height from the center
            left = int(center_x - width / 2)
            top = int(center_y - height / 2)

            # Store the class ID, confidence and bounding box of the detected object
            class_ids.append(class_id)
            confidences.append(float(confidence))
            boxes.append([left, top, width, height])
```

```
output shape: (507, 85)
ix: 0 jx: 316 class_id 0 confidence: 0.8499539
ix: 0 jx: 319 class_id 0 confidence: 0.9317015
ix: 0 jx: 325 class_id 0 confidence: 0.7301026
ix: 0 jx: 328 class_id 0 confidence: 0.9623244
ix: 0 jx: 334 class_id 0 confidence: 0.9984485
ix: 0 jx: 337 class_id 0 confidence: 0.9833524
ix: 0 jx: 343 class_id 0 confidence: 0.9978433
ix: 0 jx: 346 class_id 0 confidence: 0.63752526
output shape: (2028, 85)
ix: 1 jx: 831 class_id 2 confidence: 0.81699777
ix: 1 jx: 832 class_id 2 confidence: 0.7153828
ix: 1 jx: 877 class_id 2 confidence: 0.78542227
ix: 1 jx: 955 class_id 2 confidence: 0.8472696
ix: 1 jx: 1199 class_id 0 confidence: 0.7259762
ix: 1 jx: 1202 class_id 0 confidence: 0.9635838
ix: 1 jx: 1259 class_id 0 confidence: 0.97018635
ix: 1 jx: 1262 class_id 0 confidence: 0.9877816
ix: 1 jx: 1277 class_id 0 confidence: 0.9924558
ix: 1 jx: 1280 class_id 0 confidence: 0.99840033
ix: 1 jx: 1295 class_id 0 confidence: 0.6916557
ix: 1 jx: 1313 class_id 0 confidence: 0.9205802
ix: 1 jx: 1337 class_id 0 confidence: 0.5387825
ix: 1 jx: 1340 class_id 0 confidence: 0.6424068
ix: 1 jx: 1373 class_id 0 confidence: 0.57844293
ix: 1 jx: 1391 class_id 0 confidence: 0.89902174
output shape: (8112, 85)
ix: 2 jx: 2883 class_id 2 confidence: 0.9077373
ix: 2 jx: 2886 class_id 2 confidence: 0.63324577
ix: 2 jx: 2892 class_id 2 confidence: 0.6575013
ix: 2 jx: 3039 class_id 2 confidence: 0.8036038
ix: 2 jx: 3048 class_id 2 confidence: 0.94120145
ix: 2 jx: 3051 class_id 2 confidence: 0.61540514
ix: 2 jx: 3184 class_id 2 confidence: 0.95041007
ix: 2 jx: 3185 class_id 2 confidence: 0.8492525
ix: 2 jx: 3214 class_id 2 confidence: 0.9064125
ix: 2 jx: 3373 class_id 2 confidence: 0.68997973
ix: 2 jx: 3394 class_id 0 confidence: 0.7640699
```

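- scores = detection[5:]: The first 5 elements of the detection array are bounding box information; the remaining elements are the per-class scores.

- When extracting bounding box information from a model pre-trained on the COCO dataset:

  - Each detection consists of 85 values, and the information has to be extracted from these 85 values. They are 4 box coordinates, 1 object (objectness) score, and 80 class scores, one for each of COCO's 80 object categories.

  - The class id is the index of the highest value in this 80-element vector, and the class score is that value.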
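The 85-value layout can be checked with a made-up detection vector. The sketch uses plain Python lists instead of NumPy arrays. parse_detection is a hypothetical helper, not part of OpenCV.

```python
def parse_detection(detection):
    """Split one 85-value YOLOv3 (COCO) detection into its parts."""
    assert len(detection) == 4 + 1 + 80, 'expected 4 box values + 1 objectness + 80 class scores'
    center_x, center_y, width, height = detection[0:4]  # normalized to 0~1
    objectness = detection[4]
    class_scores = detection[5:]
    # class id = index of the highest class score (first one on ties, like np.argmax)
    class_id = max(range(len(class_scores)), key=lambda i: class_scores[i])
    return (center_x, center_y, width, height), objectness, class_id, class_scores[class_id]


# Made-up vector: a box in the middle of the image with a high score for class 2 ('car')
demo_detection = [0.5, 0.5, 0.2, 0.4, 0.95] + [0.0] * 80
demo_detection[5 + 2] = 0.9
parsed_box, demo_objectness, demo_class_id, demo_score = parse_detection(demo_detection)
print(parsed_box, demo_objectness, demo_class_id, demo_score)
```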

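Removing overlapping bounding boxes of the objects detected in each output layer with NMS

This step is also called NMS filtering.

```python
conf_threshold = 0.5
nms_threshold = 0.4
idxs = cv2.dnn.NMSBoxes(boxes, confidences, conf_threshold, nms_threshold)
print(idxs)

# Result: [ 4 17 6 15 30 28 24 32 11 8 34 33 25 29]
```

- The boxes argument of NMSBoxes is the boxes list built above, where each entry is [left, top, width, height]. confidences holds the matching confidence of each box.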

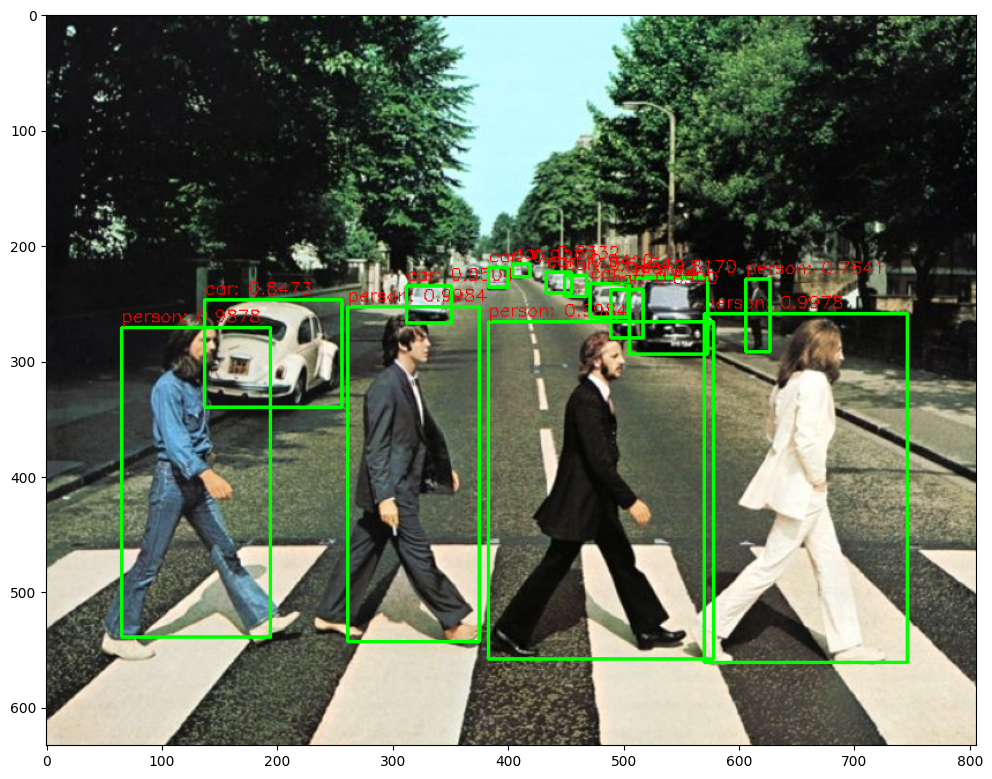

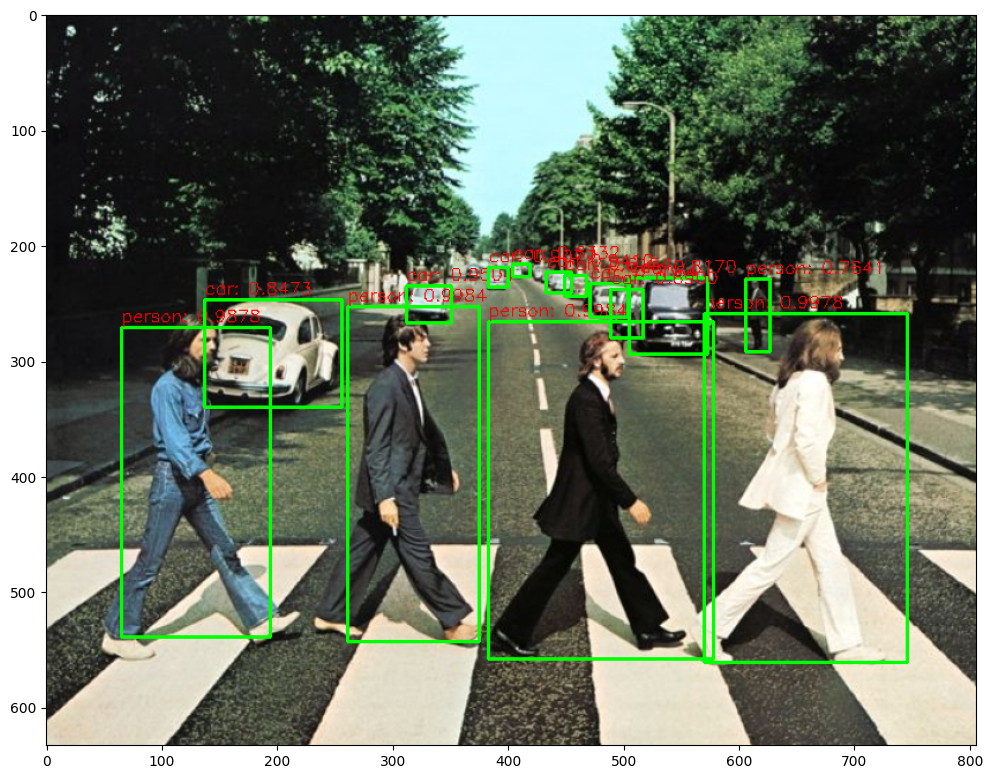

Using the idxs left after the final NMS filtering to extract the matching objects from boxes, classes and confidences, and visualizing them

```python
# Copy the original image so the boxes are drawn on a separate array
draw_img = img.copy()

# Only process when NMS left at least one object
if len(idxs) > 0:
    # Iterate over the indices of the filtered objects
    for i in idxs.flatten():
        box = boxes[i]   # bounding box
        left = box[0]    # top-left x
        top = box[1]     # top-left y
        width = box[2]   # box width
        height = box[3]  # box height

        # Text with the class name and confidence
        caption = "{}: {:.4f}".format(labels_to_names_seq[class_ids[i]], confidences[i])

        # Draw the bounding box
        cv2.rectangle(draw_img, (int(left), int(top)), (int(left + width), int(top + height)), color=green_color, thickness=2)
        # Draw the class name and confidence text
        cv2.putText(draw_img, caption, (int(left), int(top - 5)), cv2.FONT_HERSHEY_SIMPLEX, 0.5, red_color, 1)
        # Also print the class name and confidence to the console
        print(caption)

# Convert BGR to RGB for display
img_rgb = cv2.cvtColor(draw_img, cv2.COLOR_BGR2RGB)

# Figure size and display
plt.figure(figsize=(12, 12))
plt.imshow(img_rgb)
```

```
person: 0.9984
person: 0.9984
person: 0.9978
person: 0.9878
car: 0.9504
car: 0.9412
car: 0.9077
car: 0.9064
car: 0.8473
car: 0.8170
person: 0.7641
car: 0.6900
car: 0.6332
car: 0.6154
```


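Copying and initializing the image

- draw_img = img.copy() creates a new array by copying the original image. OpenCV's rectangle() draws directly on the image passed to it, so a copy is made to preserve the original.

Processing the objects left after NMS filtering

- Non-Maximum Suppression (NMS) keeps the box with the highest confidence among overlapping detections and removes the other duplicate bounding boxes. After NMS, idxs contains only the indices of the filtered objects.

- if len(idxs) > 0: ensures the drawing code only runs when at least one object was detected.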
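For intuition, below is a plain-Python sketch of greedy NMS with IoU (Intersection over Union), using the same [left, top, width, height] format as the boxes list. It follows the usual greedy algorithm:

1. Drop boxes at or below the score threshold.
2. Repeatedly keep the highest-scoring remaining box.
3. Discard boxes whose IoU with an already kept box exceeds nms_threshold.

This is not OpenCV's NMSBoxes source, and edge-case behaviour may differ. iou and greedy_nms are hypothetical names.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as [left, top, width, height]."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, score_threshold, nms_threshold):
    """Return the indices of the kept boxes, highest score first."""
    order = sorted((i for i, s in enumerate(scores) if s > score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # keep box i only if it does not overlap too much with any box already kept
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in keep):
            keep.append(i)
    return keep


demo_boxes = [[10, 10, 100, 100], [12, 12, 100, 100], [200, 200, 50, 50], [0, 0, 30, 30]]
demo_scores = [0.9, 0.8, 0.7, 0.3]
print(greedy_nms(demo_boxes, demo_scores, score_threshold=0.5, nms_threshold=0.4))
```

In this example:

- Box 1 is removed because it overlaps box 0 with an IoU of about 0.92.
- Box 3 is removed by the score threshold.
- Boxes 0 and 2 remain.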

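Displaying bounding boxes, class names and confidences

- for i in idxs.flatten(): iterates over the filtered indices one by one. Depending on the OpenCV version, NMSBoxes returns either an N x 1 array or a 1-D array. flatten() turns either into a 1-D array so it can be iterated.

- boxes[i] gives the bounding box of the detected object as top-left x, top-left y, width and height.

- caption is a string with the class name and confidence of the detected object, used to label it on the image.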
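A small NumPy check of that flatten() behaviour. The index values are borrowed from the NMS result above as made-up input. Both an N x 1 array and a 1-D array end up as the same 1-D array.

```python
import numpy as np

idxs_nx1 = np.array([[4], [17], [6]])  # shape (3, 1)
idxs_1d = np.array([4, 17, 6])         # shape (3,)

for arr in (idxs_nx1, idxs_1d):
    flat = arr.flatten()
    print(arr.shape, '->', flat.shape, flat.tolist())
```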

Drawing the bounding box

- cv2.rectangle() draws a rectangle on the image. It takes the top-left and bottom-right corners and draws the box between them. Here the color is green_color and the thickness is 2.

Drawing the text (class name, confidence)

- cv2.putText() writes text on the image. The text contains the object's class name and confidence and is placed just above the top-left corner of the bounding box (5 pixels higher). It uses OpenCV's FONT_HERSHEY_SIMPLEX font with a font scale of 0.5 and the color red_color.

Converting BGR to RGB and displaying

- OpenCV stores images in BGR order, while matplotlib expects RGB. The BGR image is therefore converted to RGB with cv2.cvtColor() and then shown with plt.imshow().
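For a 3-channel image, the BGR-to-RGB conversion is just a reversal of the last axis. The NumPy sketch below shows this on a made-up 1x2 image. The post itself uses cv2.cvtColor(..., cv2.COLOR_BGR2RGB). The slice here returns a view rather than a new contiguous array.

```python
import numpy as np

# Made-up 1x2 BGR image: one pure blue pixel and one pure red pixel
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reversing the channel axis turns BGR into RGB (the same channel swap as cv2.COLOR_BGR2RGB)
rgb = bgr[..., ::-1]
print(rgb[0, 0].tolist(), rgb[0, 1].tolist())
```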

Figure size and output

- plt.figure(figsize=(12, 12)) sets the displayed figure to 12x12 inches. plt.imshow(img_rgb) then displays the resulting image.

Creating get_detected_img, a function that runs YOLO detection on a single image

```python
import time

import cv2
import numpy as np


def get_detected_img(cv_net, img_array, conf_threshold, nms_threshold, use_copied_array=True, is_print=True):
    # The original image is resized to (416, 416) when it is fed to the network.
    # The predicted boxes are relative to that input, so the original shape is needed to map them back.
    rows = img_array.shape[0]
    cols = img_array.shape[1]

    # Draw on a copy unless the caller allows drawing on the input array itself
    draw_img = img_array.copy() if use_copied_array else img_array

    # Keep only the output layers among all Darknet layers (13x13, 26x26, 52x52 grids for YOLOv3).
    # getUnconnectedOutLayers() returns 1-based indices, as an N x 1 or a 1-D array depending on the
    # OpenCV version; flatten() handles both.
    layer_names = cv_net.getLayerNames()
    output_layers = np.array(cv_net.getUnconnectedOutLayers()).flatten()
    outlayer_names = [layer_names[i - 1] for i in output_layers]

    # The loaded model is YOLOv3 416 x 416: resize the original array to (416, 416) and convert BGR to RGB
    cv_net.setInput(cv2.dnn.blobFromImage(img_array, scalefactor=1/255.0, size=(416, 416), swapRB=True, crop=False))

    start = time.time()
    # Run Object Detection and return the results as cv_outs (one forward pass is enough)
    cv_outs = cv_net.forward(outlayer_names)

    # Colors of the bounding box border and the caption text
    green_color = (0, 255, 0)
    red_color = (0, 0, 255)

    class_ids = []
    confidences = []
    boxes = []

    # Extract the detection information of the objects detected by each output layer
    for ix, output in enumerate(cv_outs):
        # Iterate over each detected object
        for jx, detection in enumerate(output):
            scores = detection[5:]
            class_id = np.argmax(scores)
            confidence = scores[class_id]

            # Skip detections whose confidence is not above conf_threshold
            if confidence > conf_threshold:
                # detection holds the object's center coordinates and width/height, not its top-left and
                # bottom-right corners, so scale it to the original image and compute the top-left corner
                center_x = int(detection[0] * cols)
                center_y = int(detection[1] * rows)
                width = int(detection[2] * cols)
                height = int(detection[3] * rows)
                left = int(center_x - width / 2)
                top = int(center_y - height / 2)

                # Collect class id, confidence and box of every object from all output layers
                class_ids.append(class_id)
                confidences.append(float(confidence))
                boxes.append([left, top, width, height])

    # Use the idxs left after NMS filtering to extract and draw the objects from boxes, classes and confidences
    idxs = cv2.dnn.NMSBoxes(boxes, confidences, conf_threshold, nms_threshold)
    if len(idxs) > 0:
        for i in np.array(idxs).flatten():
            left, top, width, height = boxes[i]
            # labels_to_names_seq (defined globally above) maps the 0-based class_id directly to the class name
            caption = "{}: {:.4f}".format(labels_to_names_seq[class_ids[i]], confidences[i])
            # cv2.rectangle() draws on draw_img in place; the coordinates must be integers
            cv2.rectangle(draw_img, (int(left), int(top)), (int(left + width), int(top + height)), color=green_color, thickness=2)
            cv2.putText(draw_img, caption, (int(left), int(top - 5)), cv2.FONT_HERSHEY_SIMPLEX, 0.5, red_color, 1)

    if is_print:
        print('Detection time:', round(time.time() - start, 2), 'seconds')

    return draw_img
```

- Checking the input image size and creating a copy:

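  - The function stores the size of the input img_array in rows and cols. These are later used to convert the bounding box coordinates predicted by the network back to the original image size.

  - The use_copied_array flag decides whether to draw on the original image or on a copy. A copy is used to keep the original image intact.

- Filtering the output layers:

  - All layer names of the YOLO model are retrieved, and getUnconnectedOutLayers() is used to keep only the output layers. YOLOv3 has 3 output layers, and each one detects objects on a grid of a different size (13x13, 26x26, 52x52).

- Preprocessing the image and feeding it to the network:

  - The image is resized to the 416x416 size used for the YOLOv3 model. It is also converted from OpenCV's default BGR order to the RGB order YOLO expects. Both steps are done with cv2.dnn.blobFromImage(), and the result is set as the network input.

- Running object detection:

  - cv_net.forward() returns the detection results of the network's output layers. For each candidate box, they contain the center coordinates, width, height, an objectness score and the per-class scores.

- Processing the detection results:

  - Valid objects are selected based on their confidence and class ID. An object counts as valid only when its confidence is above the given threshold (conf_threshold).

  - For each remaining detection, the center coordinates and size are scaled to the original image size to compute the bounding box.

- Non-Maximum Suppression (NMS):

  - NMS removes duplicate bounding boxes. When several boxes overlap, the box with the highest confidence is kept and the rest are removed.

- Visualizing bounding boxes and text:

  - For the objects left after NMS, bounding boxes are drawn and the class name and confidence are written on the image. cv2.rectangle() draws the bounding box and cv2.putText() writes the text.

- Printing the detection time and returning the result:

  - The time taken for detection is printed when is_print is True. The image with the bounding boxes and class names drawn on it is returned.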
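get_detected_img measures elapsed time with time.time(). To time any step on its own, a small wrapper like the hypothetical timed() below also works. It uses time.perf_counter(), which the Python documentation describes as the clock with the highest available resolution for measuring short durations. The sum() call is only a stand-in workload for a real call such as get_detected_img(...).

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


# Stand-in workload; in the notebook this could be
# timed(get_detected_img, cv_net_yolo, img, conf_threshold=0.5, nms_threshold=0.4, is_print=False)
demo_result, demo_elapsed = timed(sum, range(1_000_000))
print('result:', demo_result, 'elapsed: {:.4f} s'.format(demo_elapsed))
```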

```python
import os
import time

import cv2
import matplotlib.pyplot as plt
import numpy as np
%matplotlib inline

# Load the image
default_dir = '/content/DLCV'
img = cv2.imread(os.path.join(default_dir, 'data/image/beatles01.jpg'))

# Use absolute paths where possible.
# CUR_DIR = os.path.abspath('.')
# In the Colab version, use the line below.
CUR_DIR = '/content/DLCV/Detection/yolo'

weights_path = os.path.join(CUR_DIR, 'pretrained/yolov3.weights')
config_path = os.path.join(CUR_DIR, 'pretrained/yolov3.cfg')

# Load the Darknet YOLOv3 inference model (config file first, then weights)
cv_net_yolo = cv2.dnn.readNetFromDarknet(config_path, weights_path)

conf_threshold = 0.5
nms_threshold = 0.4

# Run Object Detection and visualize the result
draw_img = get_detected_img(cv_net_yolo, img, conf_threshold=conf_threshold, nms_threshold=nms_threshold, use_copied_array=True, is_print=True)

img_rgb = cv2.cvtColor(draw_img, cv2.COLOR_BGR2RGB)
plt.figure(figsize=(12, 12))
plt.imshow(img_rgb)
```

```
Detection time: 4.44 seconds
```


- It is slower than SSD, but the Object Detection results are better.

Video Object Detection

```python
import os

import cv2


def do_detected_video(cv_net, input_path, output_path, conf_threshold, nms_threshold, is_print):
    # VideoCapture object that reads the input video file
    cap = cv2.VideoCapture(input_path)

    # Video codec (XVID)
    codec = cv2.VideoWriter_fourcc(*'XVID')

    # Size and FPS of the input video
    vid_size = (round(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), round(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))
    vid_fps = cap.get(cv2.CAP_PROP_FPS)

    # VideoWriter object that saves the output video file
    vid_writer = cv2.VideoWriter(output_path, codec, vid_fps, vid_size)

    # Total number of frames in the video
    frame_cnt = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    print('Total frame count:', frame_cnt)

    # Process every frame of the video one by one
    # (box and text colors are set inside get_detected_img)
    while True:
        hasFrame, img_frame = cap.read()  # read one frame
        if not hasFrame:
            print('No more frames to process.')
            break  # all frames have been processed

        # Run object detection with YOLOv3; drawing directly on the frame is fine here
        returned_frame = get_detected_img(cv_net, img_frame, conf_threshold=conf_threshold, nms_threshold=nms_threshold,
                                          use_copied_array=False, is_print=is_print)

        # Write the frame with the detection results to the output video
        vid_writer.write(returned_frame)

    # Finish writing the video file and release resources
    vid_writer.release()
    cap.release()
```

```python
default_dir = '/content/DLCV'
do_detected_video(cv_net_yolo, os.path.join(default_dir, 'data/video/John_Wick_small.mp4'),
                  os.path.join(default_dir, 'data/output/John_Wick_small_yolo01.avi'), conf_threshold,
                  nms_threshold, True)
```

- Loading the input video:

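  - cv2.VideoCapture(input_path) reads the given video file.

- Setting up the video output:

  - cv2.VideoWriter creates the output video file, reusing the input video's resolution (vid_size) and FPS (vid_fps).

- Processing the frames in a loop:

  - cap.read() reads the video one frame at a time.

  - get_detected_img() is called on each frame to run object detection with YOLOv3.

  - The frame with the detected objects drawn on it is written to the output video file.

- Finishing:

  - When there are no more frames, the loop ends, the video file is finalized and the resources are released.

Parameters

- cv_net: the YOLOv3 network (model).

- input_path: path of the input video file.

- output_path: path of the output video file where the result is saved.

- conf_threshold: detection confidence threshold.

- nms_threshold: Non-Maximum Suppression threshold.

- is_print: whether to print detection information (the detection time of each frame).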
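The read-until-no-frame loop can be tried without a video file. In this sketch, the VideoCapture and VideoWriter objects are replaced by plain callables. process_frames, fake_read and the string "frames" are hypothetical stand-ins, so the code only demonstrates the control flow of do_detected_video, not OpenCV itself.

```python
def process_frames(read_frame, detect, write_frame):
    """Mirror the loop in do_detected_video: read, detect, write until no frame is left."""
    processed = 0
    while True:
        has_frame, frame = read_frame()  # like cap.read()
        if not has_frame:
            break
        write_frame(detect(frame))       # like vid_writer.write(get_detected_img(...))
        processed += 1
    return processed


# Stand-ins: three "frames" (strings) and a "detector" that tags each frame
frames = iter(['frame0', 'frame1', 'frame2'])
written = []


def fake_read():
    frame = next(frames, None)
    return frame is not None, frame


count = process_frames(fake_read, lambda f: f + ':detected', written.append)
print(count, written)
```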

```python
## The video with Object Detection applied has to be downloaded via Google Drive, so mount Google Drive in Colab.
import os, sys
from google.colab import drive

drive.mount('/content/gdrive')
```

```python
## Copy the video with Object Detection applied to Google Drive so it can be downloaded from there.
## The "My Drive" directory name contains a space, so the path is wrapped in ' '.
!cp /content/DLCV/data/output/John_Wick_small_yolo01.avi '/content/gdrive/My Drive/John_Wick_small_yolo01.avi'
```

Object Detection with Tiny YOLO

Tiny YOLO is a much smaller and faster network than the regular YOLO, at the cost of lower detection accuracy.

- The pretrained tiny yolo weight file can be downloaded with wget https://pjreddie.com/media/files/yolov3-tiny.weights

- The config file can be downloaded with wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3-tiny.cfg?raw=true -O ./yolov3-tiny.cfg
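Part of the size difference is visible in the output. yolov3-tiny has only two yolo output layers instead of three: 13x13 and 26x26 grids for a 416x416 input. It therefore scores far fewer candidate boxes per image. The count below assumes 3 anchors per grid cell for both models. get_detected_img loops over whatever cv_net.forward() returns, so it handles the two-layer output unchanged.

```python
def candidate_boxes(grid_sizes, anchors_per_cell=3):
    """Number of boxes predicted for one image: sum of grid cells x anchors per cell."""
    return sum(g * g * anchors_per_cell for g in grid_sizes)


yolov3_boxes = candidate_boxes([13, 26, 52])
tiny_boxes = candidate_boxes([13, 26])
print('YOLOv3      :', yolov3_boxes)
print('YOLOv3-tiny :', tiny_boxes)
```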

```python
import os
import time

import cv2
import matplotlib.pyplot as plt
import numpy as np
%matplotlib inline

# Load the image
default_dir = '/content/DLCV'
img = cv2.imread(os.path.join(default_dir, 'data/image/beatles01.jpg'))

# Use absolute paths where possible.
# CUR_DIR = os.path.abspath('.')
# In the Colab version, use the line below.
CUR_DIR = '/content/DLCV/Detection/yolo'

weights_path = os.path.join(CUR_DIR, 'pretrained/yolov3-tiny.weights')
config_path = os.path.join(CUR_DIR, 'pretrained/yolov3-tiny.cfg')

# Load the Darknet tiny YOLOv3 inference model (config file first, then weights)
cv_net_yolo = cv2.dnn.readNetFromDarknet(config_path, weights_path)

# Tiny YOLO generally produces lower confidence values, so the threshold is lowered.
conf_threshold = 0.3
nms_threshold = 0.4

# Run Object Detection and visualize the result
draw_img = get_detected_img(cv_net_yolo, img, conf_threshold=conf_threshold, nms_threshold=nms_threshold, use_copied_array=True, is_print=True)

img_rgb = cv2.cvtColor(draw_img, cv2.COLOR_BGR2RGB)
plt.figure(figsize=(12, 12))
plt.imshow(img_rgb)
```

Video Detection with Tiny YOLO

```python
default_dir = '/content/DLCV'
do_detected_video(cv_net_yolo, os.path.join(default_dir, 'data/video/John_Wick_small.mp4'),
                  os.path.join(default_dir, 'data/output/John_Wick_small_tiny_yolo01.avi'), conf_threshold,
                  nms_threshold, True)
```

```python
## Copy the video with Object Detection applied to Google Drive so it can be downloaded from there.
## The "My Drive" directory name contains a space, so the path is wrapped in ' '.
!cp /content/DLCV/data/output/John_Wick_small_tiny_yolo01.avi '/content/gdrive/My Drive/John_Wick_small_tiny_yolo01.avi'
```
